기계학습(Machine Learning) 알고리즘¶

정규화 방법론(Regularized Method, Penalized Method, Contrained Least Squares)¶

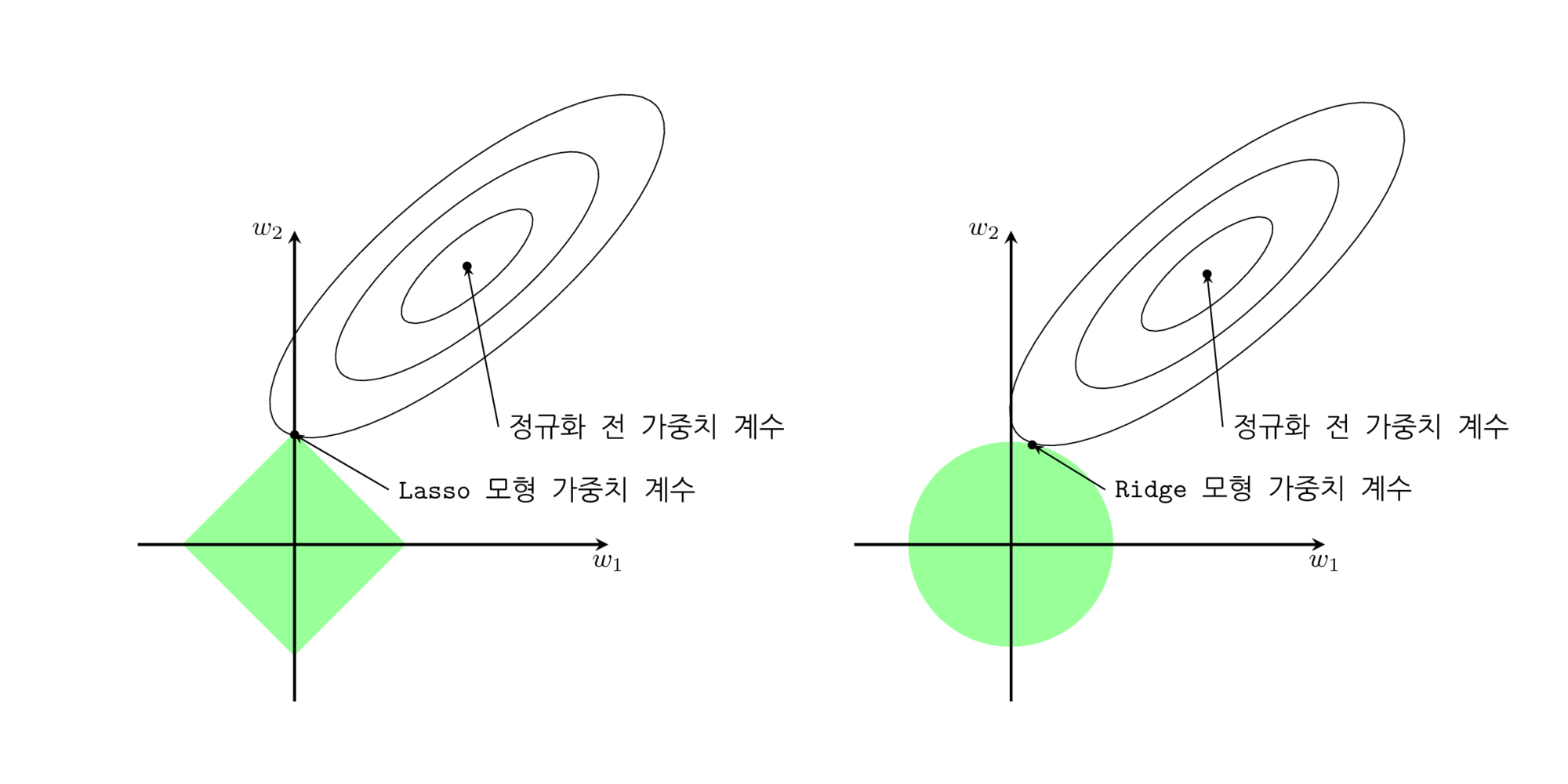

“선형회귀 계수(Weight)에 대한 제약 조건을 추가함으로써 모형이 과도하게 최적화되는 현상, 즉 과최적화를 막는 방법”

“과최적화는 계수 크기를 과도하게 증가하는 경향이 있기에, 정규화 방법에서의 제약 조건은 일반적으로 계수의 크기를 제한하는 방법”

정규화 회귀분석 알고리즘¶

0) Standard Regression:

1) Ridge Regression:

정규화조건/패널티/제약조건: 추정계수의 제곱합을 최소로 하는 것

\begin{align*} \hat{\beta} = arg\underset{\hat{\beta}}{min} \Biggl[\displaystyle \sum_{j=1}^t \Bigl(y_j - \displaystyle \sum_{i=0}^k \beta_i x_{ij}\Bigr)^2 + \lambda \displaystyle \sum_{i=0}^k \beta_i^2\Biggr] \ where~\lambda~is~hyper~parameter(given~by~human) \end{align*}

하이퍼파라미터(\(\lambda\)): 기존의 잔차 제곱합과 추가 제약 조건의 비중을 조절하기 위한 하이퍼모수(hyperparameter)

\(\lambda\)=0: 일반적인 선형 회귀모형(OLS)

\(\lambda\)를 크게 두면 정규화(패널티) 정도가 커지기 때문에 가중치(\(\beta_i\))의 값들이 커질 수 없음(작아짐)

\(\lambda\)를 작게 두면 정규화(패널티) 정도가 작아 지기 때문에 가중치(\(\beta_i\))의 값들의 자유도가 높아져 커질 수 있음(커짐)

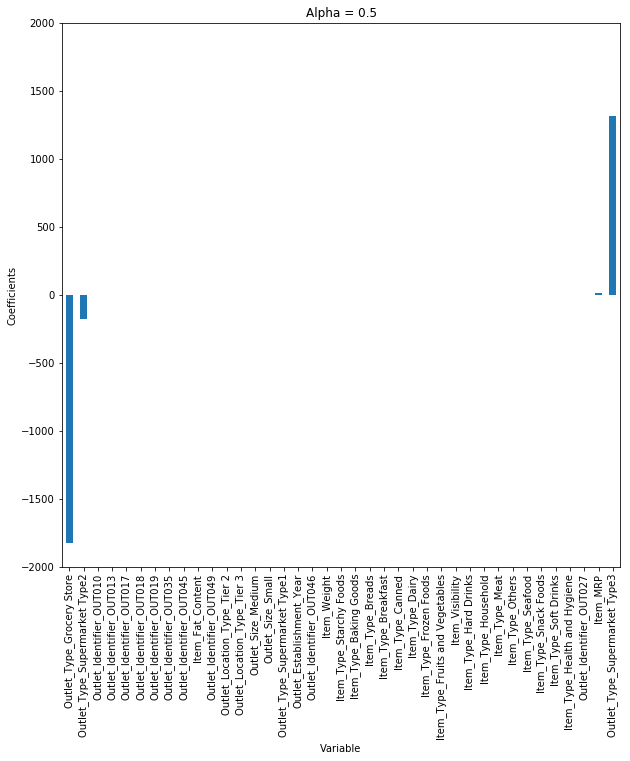

2) Lasso(Least Absolute Shrinkage and Selection Operator) Regression:

정규화조건/패널티/제약조건: 추정계수의 절대값 합을 최소로 하는 것

\begin{align*} \hat{\beta} = arg\underset{\hat{\beta}}{min} \Biggl[\displaystyle \sum_{j=1}^t \Bigl(y_j - \displaystyle \sum_{i=0}^k \beta_i x_{ij}\Bigr)^2 + \lambda \displaystyle \sum_{i=0}^k \left|\beta_i \right|\Biggr] \ where~\lambda~is~hyper~parameter(given~by~human) \end{align*}

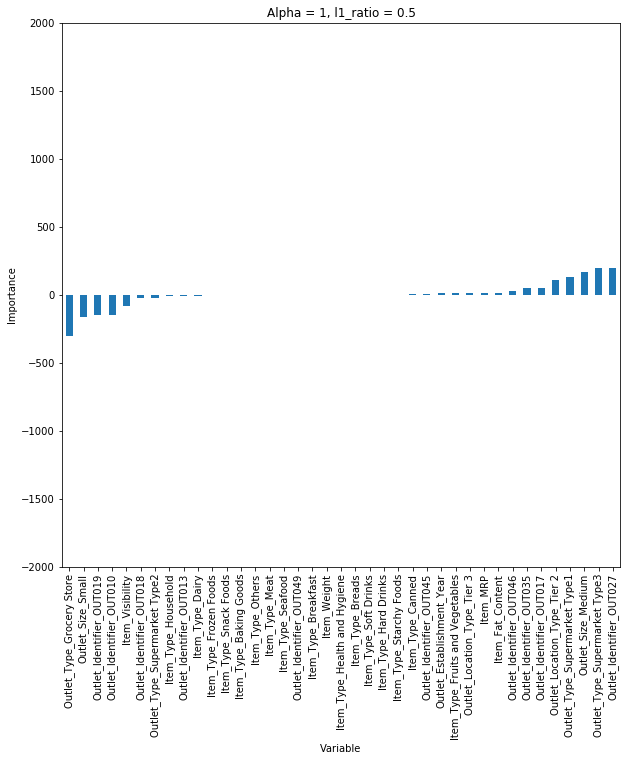

3) Elastic Net:

정규화조건/패널티/제약조건: 추정계수의 절대값 합과 제곱합을 동시에 최소로 하는 것

\begin{align*} \hat{\beta} &= arg\underset{\hat{\beta}}{min} \Biggl[\displaystyle \sum_{j=1}^t \Bigl(y_j - \displaystyle \sum_{i=0}^k \beta_i x_{ij}\Bigr)^2 + \lambda_1 \displaystyle \sum_{i=0}^k \left|\beta_i \right| + \lambda_2 \displaystyle \sum_{i=0}^k \beta_i^2\Biggr] \ &where~\lambda_1~and~\lambda_2~are~hyper~parameters(given~by~human) \end{align*}

하이퍼파라미터 특성 및 요약¶

최적 정규화(최적 하이퍼파라미터 추정): 하이퍼파라미터(Hyperparameter)에 따른 검증성능 차이 존재

Train Set: 하이퍼파라미터가 작으면 작을수록 좋아짐(과최적화)

Test Set: 하이퍼파라미터가 특정한 범위에 있을때 좋아짐(추정필요)

Summary

Standard:

Ridge: - 알고리즘이 모든 변수들을 포함하려 하기 때문에 계수의 크기가 작아지고 모형의 복잡도가 줄어듬

- 모든 변수들을 포함하려 하므로 변수의 수가 많은 경우 효과가 좋지 않으나 과적합(Overfitting)을 방지하는데 효과적

- 다중공선성이 존재할 경우, 변수 간 상관관계에 따라 계수로 다중공선성이 분산되기에 효과가 높음

LASSO:

- 알고리즘이 최소한의 변수를 포함하여 하기 때문의 나머지 변수들의 계수는 0이됨 (Feature Selection 기능)

- 변수선택 기능이 있기에 일반적으로 많이 사용되는 이점이 있지만 특정변수에 대한 계수가 커지는 단점 존재

- 다중공선성이 존재할 경우, 특정 변수만을 선택하는 방식이라 Ridge에 비해 다중공선성 문제에 효과가 낮음

Elastic Net:

- 큰 데이터셋에서 Ridge와 LASSO의 효과를 모두 반영하기에 효과가 좋음 (적은 데이터셋은 효과 낮음)

파라미터 세팅(실습)

1) “statsmodels”: 선형 회귀모형 클래스의 fit_regularized 메서드를 사용하여 Ridge/LASSO/Elastic Net 계수 추정

Ridge:

$\lambda_1 = 0,~~0 < \lambda_2 < 1 \\ => L_1 = 0,~~alpha \ne 0$ - **LASSO:**$0 < \lambda_1 < 1,~~\lambda_2 = 0 \\ => L_1 = 1,~~alpha \ne 0$ - **Elastic Net:**$0 < (\lambda_1, \lambda_2) < 1 \\ => 0 < L_1 < 1,~~alpha \ne 0$ 2) “sklearn”: 정규화 회귀모형을 위한 Ridge, Lasso, ElasticNet 별도 클래스 제공

$0 < (\lambda = alpha) < 1$ - [**LASSO:**](http://scikit-learn.org/stable/modules/generated/sklearn.linear_model.Lasso.html)$0 < (\lambda = alpha) < 1$ - [**Elastic Net:**](http://scikit-learn.org/stable/modules/generated/sklearn.linear_model.ElasticNet.html)$0 < (\lambda_1, \lambda_2) < 1 \\ => 0 < L_1 < 1,~~alpha \ne 0$

# Ridge

fit = Ridge(alpha=0.5, fit_intercept=True, normalize=True, random_state=123).fit(X_train, Y_train)

pred_tr = fit.predict(X_train)

pred_te = fit.predict(X_test)

# LASSO

fit = Lasso(alpha=0.5, fit_intercept=True, normalize=True, random_state=123).fit(X_train, Y_train)

pred_tr = fit.predict(X_train)

pred_te = fit.predict(X_test)

# Elastic Net

fit = ElasticNet(alpha=0.01, l1_ratio=1, fit_intercept=True, normalize=True, random_state=123).fit(X_train, Y_train)

pred_tr = fit.predict(X_train)

pred_te = fit.predict(X_test)

실습¶

import warnings

warnings.filterwarnings('always')

warnings.filterwarnings('ignore')

import pandas as pd

import numpy as np

from sklearn import datasets

import matplotlib.pyplot as plt

from sklearn.linear_model import Ridge, Lasso, ElasticNet

diabetes = datasets.load_diabetes()

X = diabetes.data

y = diabetes.target

print('Data View')

display(pd.concat([pd.DataFrame(y, columns=['diabetes_value']), pd.DataFrame(X, columns=diabetes.feature_names)], axis=1).head())

Data View

| diabetes_value | age | sex | bmi | bp | s1 | s2 | s3 | s4 | s5 | s6 | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 151.0 | 0.038076 | 0.050680 | 0.061696 | 0.021872 | -0.044223 | -0.034821 | -0.043401 | -0.002592 | 0.019908 | -0.017646 |

| 1 | 75.0 | -0.001882 | -0.044642 | -0.051474 | -0.026328 | -0.008449 | -0.019163 | 0.074412 | -0.039493 | -0.068330 | -0.092204 |

| 2 | 141.0 | 0.085299 | 0.050680 | 0.044451 | -0.005671 | -0.045599 | -0.034194 | -0.032356 | -0.002592 | 0.002864 | -0.025930 |

| 3 | 206.0 | -0.089063 | -0.044642 | -0.011595 | -0.036656 | 0.012191 | 0.024991 | -0.036038 | 0.034309 | 0.022692 | -0.009362 |

| 4 | 135.0 | 0.005383 | -0.044642 | -0.036385 | 0.021872 | 0.003935 | 0.015596 | 0.008142 | -0.002592 | -0.031991 | -0.046641 |

alpha_weight = 0.5

fit = Ridge(alpha=alpha_weight, fit_intercept=True, normalize=True, random_state=123).fit(X, y)

pd.DataFrame(np.hstack([fit.intercept_, fit.coef_]), columns=['alpha = {}'.format(alpha_weight)])

| alpha = 0.5 | |

|---|---|

| 0 | 152.133484 |

| 1 | 20.137357 |

| 2 | -131.242606 |

| 3 | 383.481783 |

| 4 | 244.837872 |

| 5 | -15.187056 |

| 6 | -58.344798 |

| 7 | -174.842798 |

| 8 | 121.985055 |

| 9 | 328.499702 |

| 10 | 110.886036 |

alpha_weight = 0.5

fit = Lasso(alpha=alpha_weight, fit_intercept=True, normalize=True, random_state=123).fit(X, y)

pd.DataFrame(np.hstack([fit.intercept_, fit.coef_]), columns=['alpha = {}'.format(alpha_weight)])

| alpha = 0.5 | |

|---|---|

| 0 | 152.133484 |

| 1 | 0.000000 |

| 2 | -0.000000 |

| 3 | 471.038733 |

| 4 | 136.507108 |

| 5 | -0.000000 |

| 6 | -0.000000 |

| 7 | -58.319549 |

| 8 | 0.000000 |

| 9 | 408.023324 |

| 10 | 0.000000 |

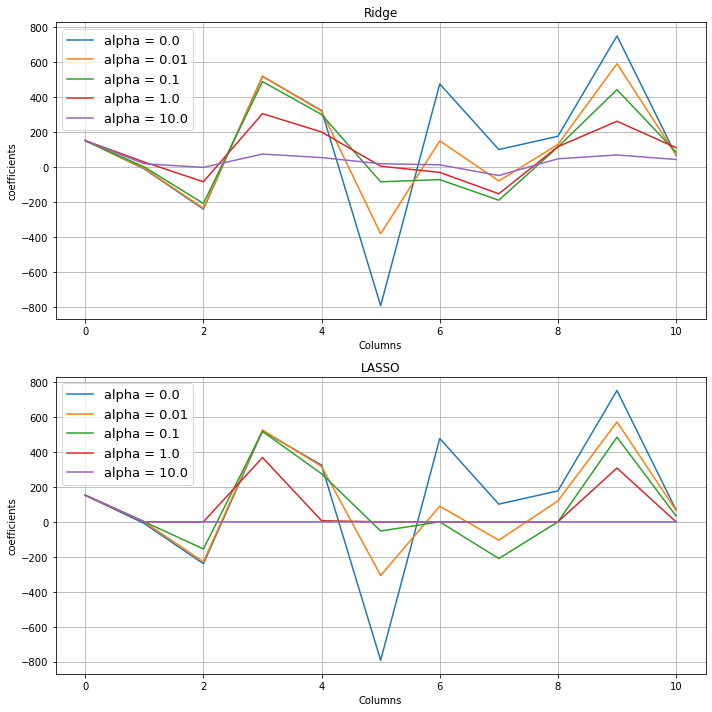

result_Ridge = pd.DataFrame()

alpha_candidate = np.hstack([0, np.logspace(-2, 1, 4)])

for alpha_weight in alpha_candidate:

fit = Ridge(alpha=alpha_weight, fit_intercept=True, normalize=True, random_state=123).fit(X, y)

result_coef = pd.DataFrame(np.hstack([fit.intercept_, fit.coef_]), columns=['alpha = {}'.format(alpha_weight)])

result_Ridge = pd.concat([result_Ridge, result_coef], axis=1)

result_LASSO = pd.DataFrame()

alpha_candidate = np.hstack([0, np.logspace(-2, 1, 4)])

for alpha_weight in alpha_candidate:

fit = Lasso(alpha=alpha_weight, fit_intercept=True, normalize=True, random_state=123).fit(X, y)

result_coef = pd.DataFrame(np.hstack([fit.intercept_, fit.coef_]), columns=['alpha = {}'.format(alpha_weight)])

result_LASSO = pd.concat([result_LASSO, result_coef], axis=1)

result_Ridge.plot(figsize=(10,10), legend=True, ax=plt.subplot(211))

plt.title('Ridge')

plt.xlabel('Columns')

plt.ylabel('coefficients')

plt.legend(fontsize=13)

plt.grid()

result_LASSO.plot(legend=True, ax=plt.subplot(212))

plt.title('LASSO')

plt.xlabel('Columns')

plt.ylabel('coefficients')

plt.legend(fontsize=13)

plt.tight_layout()

plt.grid()

plt.show()

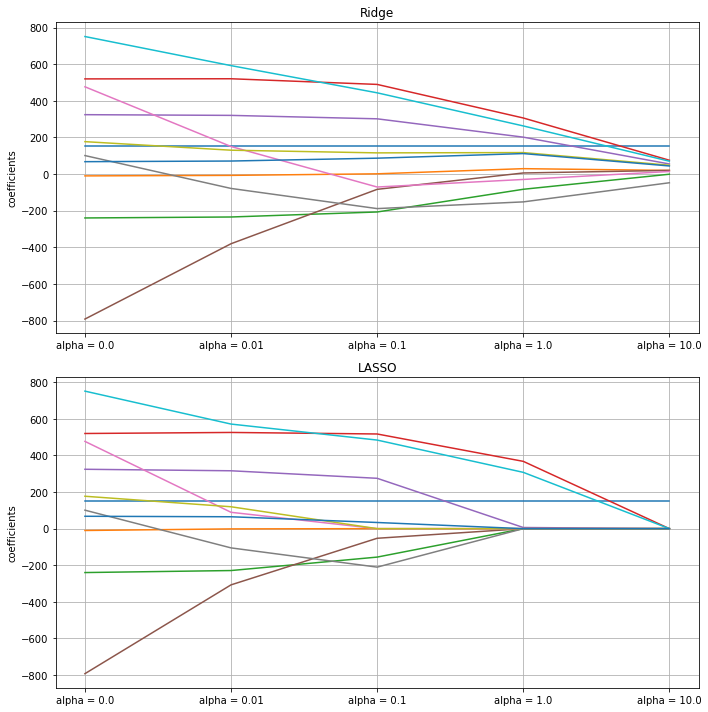

result_Ridge.T.plot(figsize=(10,10), legend=False, ax=plt.subplot(211))

plt.title('Ridge')

plt.xticks(np.arange(len(result_Ridge.columns)), [i for i in result_Ridge.columns])

plt.ylabel('coefficients')

plt.grid()

result_LASSO.T.plot(legend=False, ax=plt.subplot(212))

plt.title('LASSO')

plt.xticks(np.arange(len(result_Ridge.columns)), [i for i in result_Ridge.columns])

plt.ylabel('coefficients')

plt.tight_layout()

plt.grid()

plt.show()

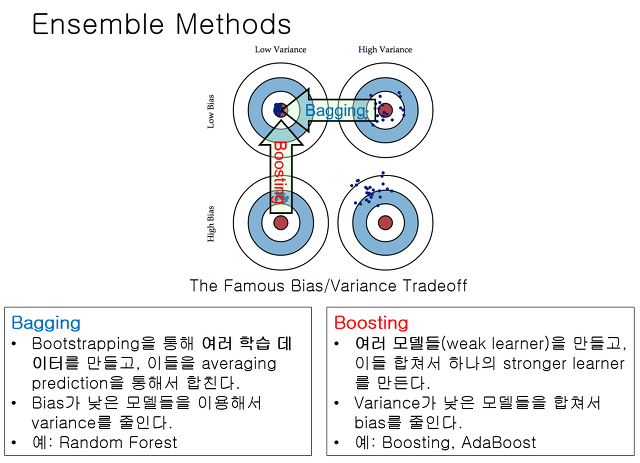

Bagging and Boosting 모델¶

편향-분산 상충관계(Bias-variance Trade-off)¶

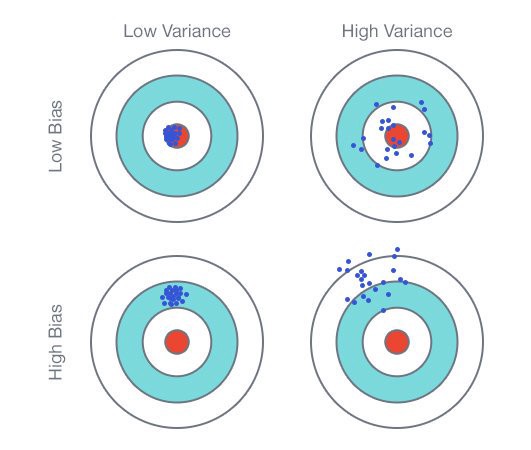

1) 편향과 분산의 정의

(비수학적 이해)

편향(Bias): 점추정

예측값과 실제값의 차이

모델 학습시 여러 데이터로 학습 후 예측값의 범위가 정답과 얼마나 멀리 있는지 측정

편향(Bias(Real)): 모형화(단순화)로 미처 반영하지 못한 복잡성

=> 편향이 작다면 Training 데이터 패턴(복잡성)을 최대반영 의미

=> 편향이 크다면 Training 데이터 패턴(복잡성)을 최소반영 의미분산(Variance): 구간추정

학습한 모델의 예측값이 평균으로부터 퍼진 정도(변동성/분산)

여러 모델로 학습을 반복한다면, 학습된 모델별로 예측한 값들의 차이를 측정

분산(Variance(Real)): 다른 데이터(Testing)를 사용했을때 발생할 변화

=> 분산이 작다면 다른 데이터로 예측시 적은 변동 예상

=> 분산이 크다면 다른 데이터로 예측시 많은 변동 예상

(수학적 이해)

\begin{align*} \text{Equation of Error} && Err(x) &= E\Bigl[\bigl(Y-\hat{f}(x)\bigr)^2 \Bigr] \ && &= \Bigl(E[\hat{f}(x)] - f(x)\Bigr)^2 + E \Bigl[\bigl(\hat{f}(x) - E[\hat{f}(x)]\bigr)^2 \Bigr] + \sigma_{\epsilon}^2 \ && &= \text{Bias}^2 + \text{Variance} + \text{Irreducible Error} \end{align*}

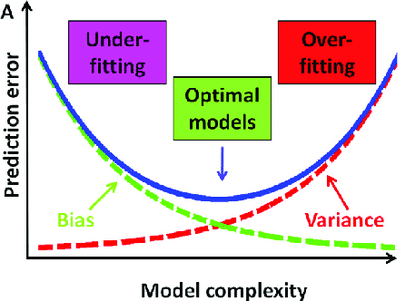

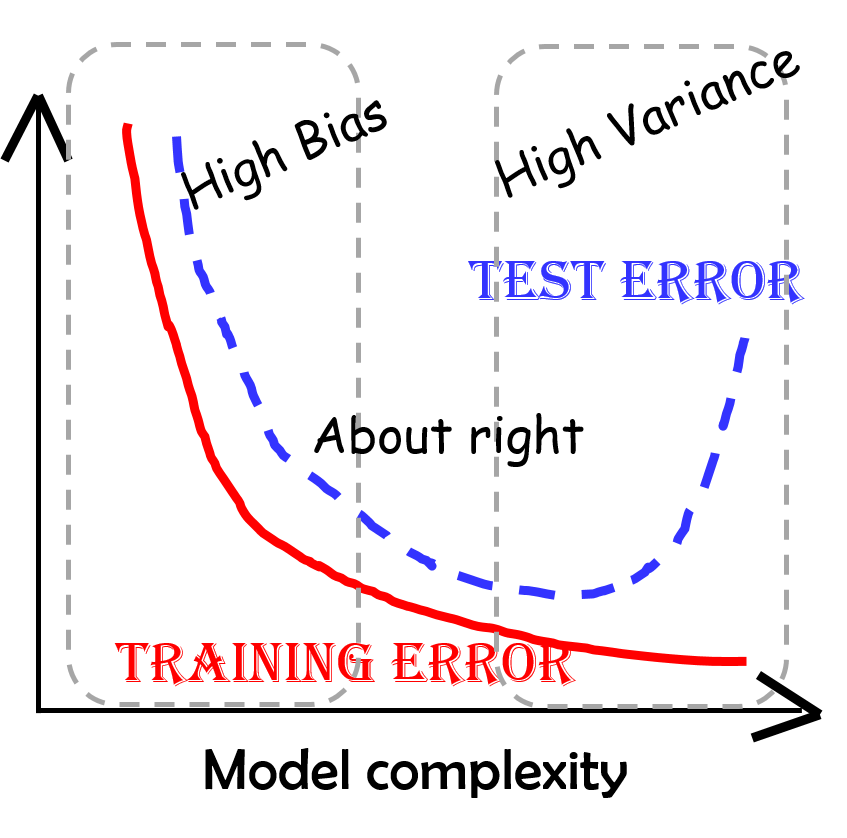

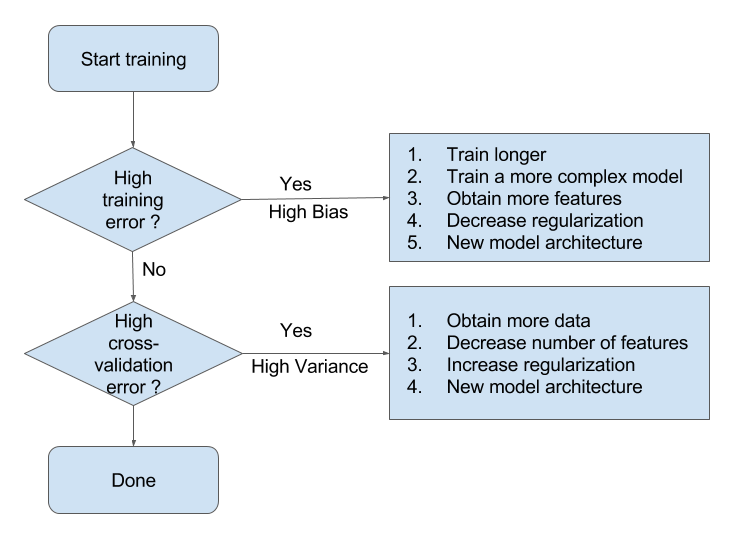

2) 편향과 분산의 관계

모델의 복잡도가 낮으면 Bias가 증가하고 Variance가 감소(Underfitting)

: 구간추정 범위는 좁으나 점추정 정확성 낮음

: Training/Testing 모두 예측력이 낮음모델의 복잡도가 높으면 Bias가 감소하고 Variance가 증가(Overfitting)

: 점추정 정확성은 높으나 구간추정 범위는 넓음

: Training만 잘 예측력 높고 Testing은 예측력 낮음Bias와 Variance가 최소화 되는 수준에서 모델의 복잡도 선택

3) 편향과 분산 모두를 최소화하는 방법

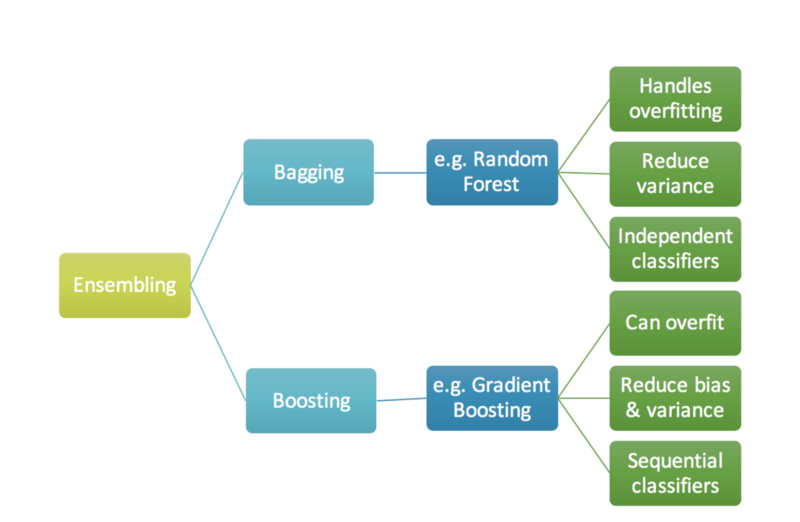

Bagging vs Boosting¶

앙상블(Ensemble, Ensemble Learning, Ensemble Method)이란 머신러닝에서 여러개의 모델을 학습시켜,

그 모델들의 예측결과들을 이용해 하나의 모델보다 더 나은 값을 예측하는 방법

Bagging(Bootstrap Aggregating):

부트스트래핑(Bootstraping): 예측값과 실제값의 차이 중복을 허용한 리샘플링(Resampling)

페이스팅(Pasting): 이와 반대로 중복을 허용하지 않는 샘플링

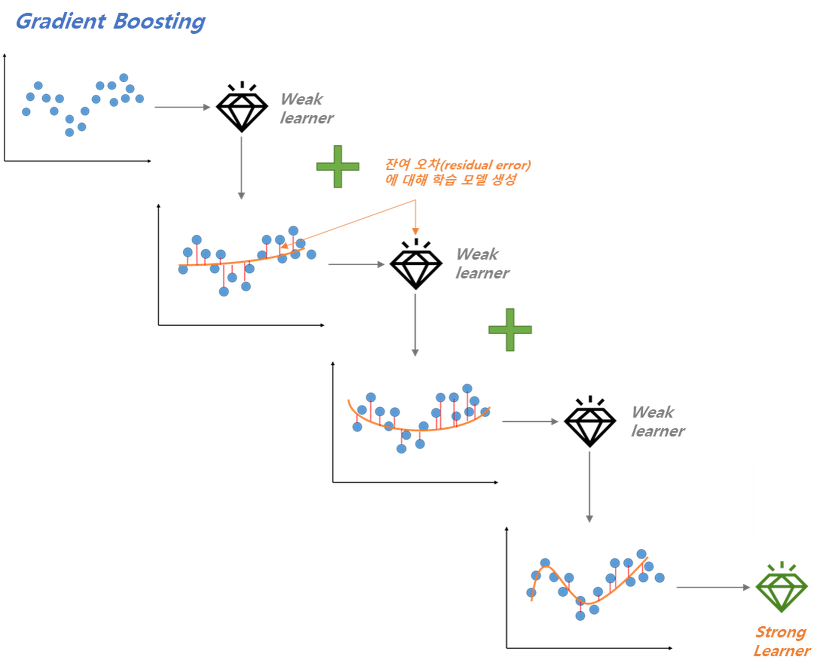

Boosting:

성능이 약한 학습기(weak learner)를 여러 개 연결하여 강한 학습기(strong learner)를 만드는 앙상블 학습

앞에서 학습된 모델을 보완해나가면서 더나은 모델로 학습시키는 것

- |

Bagging |

Boosting |

|---|---|---|

특징 |

병렬 앙상블 모델(각 모델은 서로 독립) |

연속 앙상블 모델(이전 모델의 오류 반영) |

목적 |

Variance 감소 |

Bias 감소 |

적합한 상황 |

Low Bias + High Variance |

High Bias + Low Variance |

Sampling |

Random Sampling |

Random Sampling with weight on error |

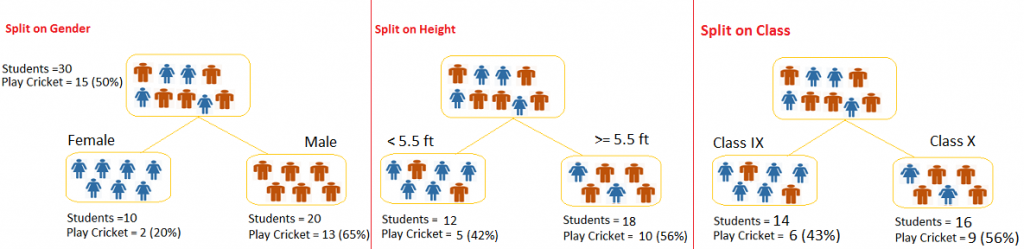

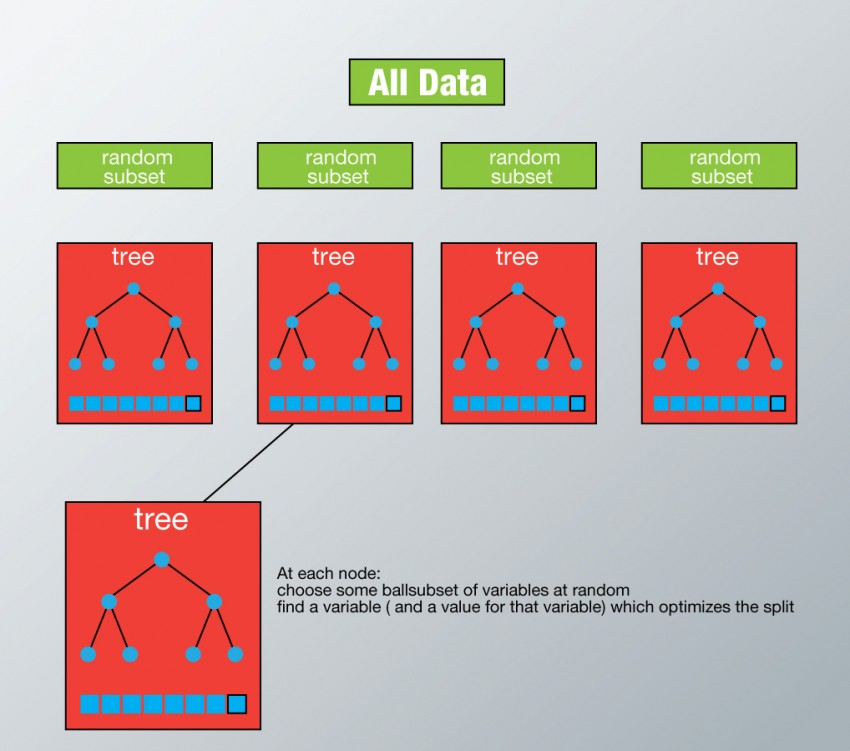

Bagging 알고리즘¶

의사결정나무(Decision Tree):

렌덤포레스트(Random Forest): 여러개의 의사결정나무(Decision Tree) 생성한 다음, 각 개별 트리의 예측값들 중 가장 많은 선택을 받은 변수들로 예측하는 알고리즘, 의사결정나무의 CLT버전

# DecisionTree

fit = DecisionTreeRegressor().fit(X_train, Y_train)

pred_tr = fit.predict(X_train)

pred_te = fit.predict(X_test)

# RandomForestRegressor

fit = RandomForestRegressor(n_estimators=100, random_state=123).fit(X_train, Y_train)

pred_tr = fit.predict(X_train)

pred_te = fit.predict(X_test)

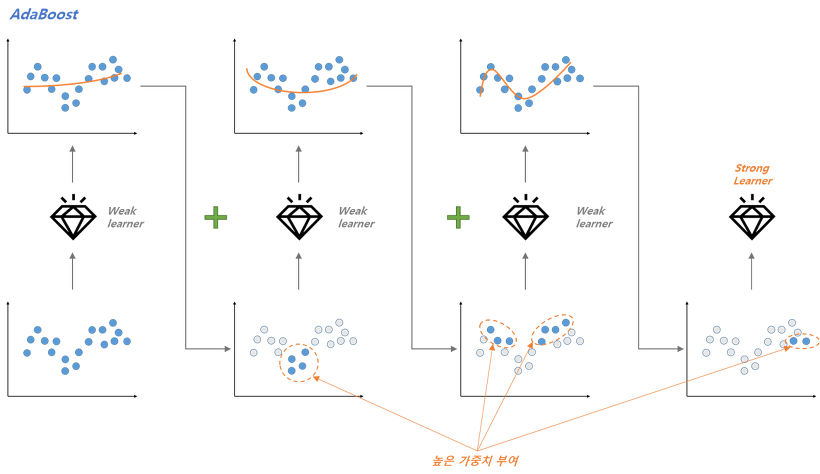

Boosting 알고리즘¶

Adaptive Boosting(AdaBoost): 학습된 모델이 과소적합(학습하기 어려운 데이터)된 학습 데이터 샘플의 가중치를 높이면서 더 잘 적합되도록 하는 방식

Gradient Boosting Machine(GBM): 아다부스트 처럼 학습단계 마다 데이터 샘플의 가중치를 업데이트 하는 것이 아니라, 학습 전단계 모델에서의 잔차(Residual)을 모델에 학습시키는 방법

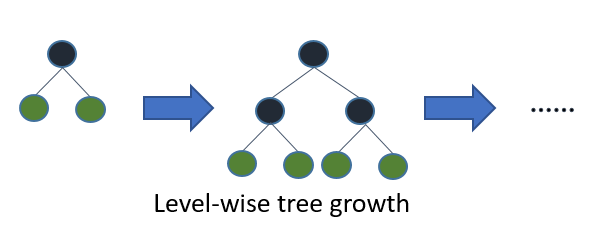

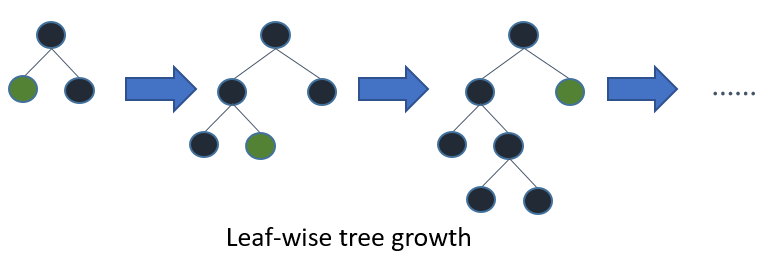

XGBoost(eXtreme Gradient Boosting): 높은 예측력으로 많은 양의 데이터를 다룰 때 사용되는 부스팅 알고리즘

LightGBM: 현존하는 부스팅 알고리즘 중 가장 빠르고 높은 예측력 제공

Algorithms |

Specification |

Others |

|---|---|---|

AdaBoost |

다수결을 통한 정답분류 및 오답에 가중치 부여 |

- |

GBM |

손실함수(검증지표)의 Gradient로 오답에 가중치 부여 |

- |

XGBoost |

GMB대비 성능향상 |

2014년 공개 |

LightGBM |

XGBoost대비 성능향상 및 자원소모 최소화 |

2016년 공개 |

# GradientBoostingRegression

fit = GradientBoostingRegressor(alpha=0.1, learning_rate=0.05, loss='huber', criterion='friedman_mse',

n_estimators=1000, random_state=123).fit(X_train, Y_train)

pred_tr = fit.predict(X_train)

pred_te = fit.predict(X_test)

# XGBoost

fit = XGBRegressor(learning_rate=0.05, n_estimators=100, random_state=123).fit(X_train, Y_train)

pred_tr = fit.predict(X_train)

pred_te = fit.predict(X_test)

# LightGMB

fit = LGBMRegressor(learning_rate=0.05, n_estimators=100, random_state=123).fit(X_train, Y_train)

pred_tr = fit.predict(X_train)

pred_te = fit.predict(X_test)

비교¶

시계열 알고리즘¶

비정상성(Non-stationary)의 정상성(Stationary) 변환¶

목적: 정상성 확보를 통해 안정성이 높아지고 예측력 향상

장점: 절약성 원칙(Principle of Parsimony)에 따라 적은 모수만으로 모델링 가능하기에 과적합 확률이 줄어듬

방법: 제곱, 루트, 로그, 차분 등

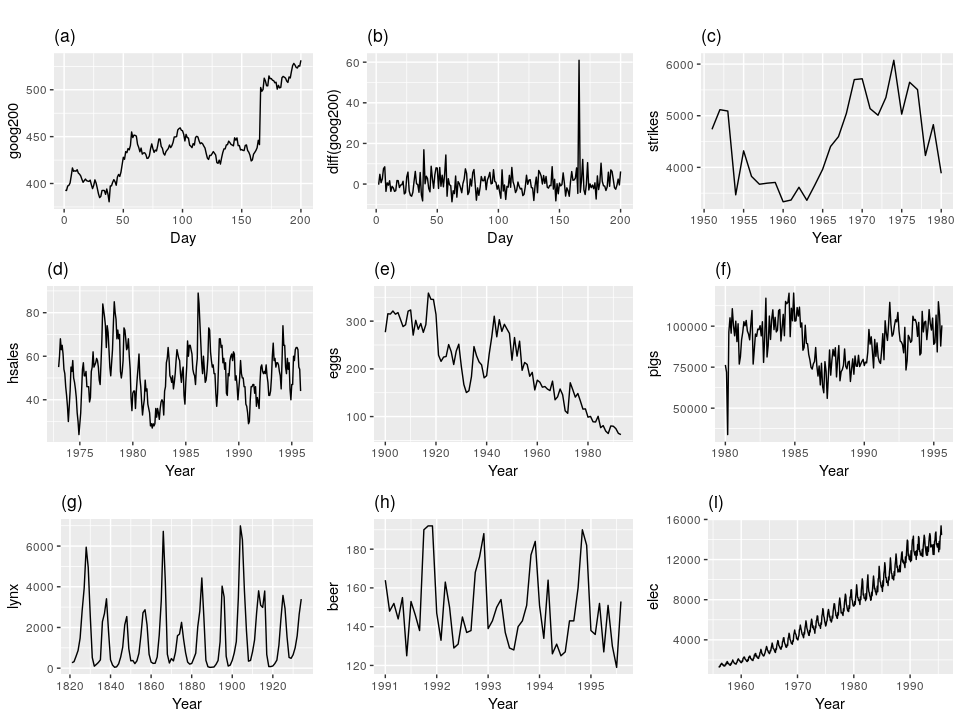

이론예시:

- Trend: a/c/e/f/i

- Seasonality: d/h/i

- Cycle: g

- Non-constant Variance: i

1) 로그변환(Logarithm Transform):

시간흐름에 비례하여 값이 커지는 경우(분산 증가)

비정상 확률 과정으로 표준편차가 자료의 크기에 비례하여 증가하거나 지수함수적으로 증가하는 경우

로그 변환한 확률 과정의 분산은 일정하기에 추세 제거로 기댓값이 0이 되면 정상 과정으로 모형화 가능

\begin{align*} \text{Distribution of Original} && \text{E}(Y_t) &= \mu_t = f(t) \ && \sqrt{\text{Var}(Y_t)} &= \mu_t \sigma \ \text{Distribution of Log-transform} && Y_t &= Y_{t-1} + Y_t - Y_{t-1} \ && \dfrac{Y_t}{Y_{t-1}} &= 1 + \dfrac{Y_t - Y_{t-1}}{Y_{t-1}} \ && log(\dfrac{Y_t}{Y_{t-1}}) &= log(1 + \dfrac{Y_t - Y_{t-1}}{Y_{t-1}}) \approx \dfrac{Y_t - Y_{t-1}}{Y_{t-1}} \ && log(Y_t) - log(Y_{t-1}) &\approx \dfrac{Y_t - Y_{t-1}}{Y_{t-1}} \ && log(Y_t) &\approx log(Y_{t-1}) + \dfrac{Y_t - Y_{t-1}}{Y_{t-1}} \ && log(Y_t) &\approx log(\mu_t) + \dfrac{Y_t - \mu_t}{\mu_t} \ && \text{E}(\log Y_t) &= \log \mu_t \ && \text{Var}(\log Y_t) &\approx \sigma^2 \ \text{Generalization of Return} && R_t &= \dfrac{Y_{t}}{Y_{t-1}} - 1 \ && \log{Y_t} - \log{Y_{t-1}} &= \log{R_t + 1} \approx R_t ;; \text{ if } \left| R_t \right| < 0.2 \ \end{align}

2) 차분(Difference): 특정 시점 또는 시점들의 데이터가 발산할 경우 시점간 차분(변화량)으로 정상성 변환 가능

계절성(Seasonality, \(S_t\)): 특정한 달/요일에 따라 기대값이 달라지는 것, 변수 더미화를 통해 추정 가능

계절성 제거: 1) 계절성 추정(\(f(t)\)) 후 계절성 제거를 통한 정상성 확보 (수학적 이해) - 확률과정의 계절변수 더미화를 통해 기댓값 함수를 알아내는 것 - 확률과정(\(Y_t\))이 추정이 가능한 결정론적 계절성함수(\(f(t)\))와 정상확률과정(\(Y^s_t\))의 합

\begin{align*}

\text{Main Equation} && Y_t &= f(t) + Y^s_t \\

\text{where} && f(t) &= \sum_{i=0}^{\infty} a_i D_i = a_0 + a_1 D_1 + a_2 D_2 + \cdots

\end{align*}

계절성 제거: 2) 차분 적용 \((1-L^d) Y_t\) 후 계절성 제거를 통한 정상성 확보 (수학적 이해)

\begin{align*}

\text{Main Equation of d=1} && Y_t &=> (1-L^1) Y_t \\

&& &= (1-Lag^1) Y_t \\

&& &= Y_t - Lag^1(Y_t) \\

&& &= Y_t - Y_{t-1} \\

\text{Main Equation of d=2} && Y_t &=> (1-L^2) Y_t \\

&& &= (1-Lag^2) Y_t \\

&& &= Y_t - Lag^2(Y_t) \\

&& &= Y_t - Y_{t-2} \\

\end{align*}

추세(Trend, \(T_t\)): 시계열이 시간에 따라 증가, 감소 또는 일정 수준을 유지하는 경우

추세 제거: 1) 추세 추정(\(f(t)\)) 후 추세 제거를 통한 정상성 확보 (수학적 이해) - 확률과정의 결정론적 기댓값 함수를 알아내는 것 - 확률과정(\(Y_t\))이 추정이 가능한 결정론적 추세함수(\(f(t)\))와 정상확률과정(\(Y^s_t\))의 합

\begin{align*}

\text{Main Equation} && Y_t &= f(t) + Y^s_t \\

\text{where} && f(t) &= \sum_{i=0}^{\infty} a_i t^i = a_0 + a_1 t + a_2 t^2 + \cdots

\end{align*}

추세 제거: 2) 차분 적용 \((1-L^1)^d Y_t\) 후 추세 제거를 통한 정상성 확보 (수학적 이해)

\begin{align*}

\text{Main Equation of d=1} && Y_t &=> (1-L^1)^1 Y_t \\

&& &= (1-Lag^1)^1 Y_t \\

&& &= Y_t - Lag^1(Y_t) \\

&& &= Y_t - Y_{t-1} \\

\text{Main Equation of d=2} && Y_t &=> (1-L^1)^2 Y_t \\

&& &= (1-2L^1+L^2) Y_t \\

&& &= (1-2Lag^1+Lag^2) Y_t \\

&& &= Y_t - 2Lag^1(Y_t) + Lag^2(Y_t) \\

&& &= Y_t - Lag^1(Y_t) - Lag^1(Y_t) + Lag^2(Y_t) \\

&& &= (Y_t - Lag^1(Y_t)) - (Lag^1(Y_t) - Lag^2(Y_t)) \\

&& &= (Y_t - L^1(Y_t)) - (L^1(Y_t) - L^2(Y_t)) \\

&& &= (Y_t - Y_{t-1}) - (Y_{t-1} - Y_{t-2}) \\

&& &= Y_t - 2Y_{t-1} + Y_{t-2} \\

\end{align*}

3) Box-Cox 변환: 정규분포가 아닌 자료를 정규분포로 변환하기 위해 사용

모수(parameter) \(\lambda\)를 가지며, 보통 여러가지 \(\lambda\) 값을 시도하여 가장 정규성을 높여주는 값을 사용

\begin{align*} y^{(\boldsymbol{\lambda})} = \begin{cases} \dfrac{y^{\lambda} - 1}{\lambda} & \text{if } \lambda \neq 0, \ \ln{y} & \text{if } \lambda = 0, \end{cases} \end{align*}

정상성 테스트 방향¶

추세와 계절성 모두 제거된 정상성 데이터 변환 필요!

ADF 정상성 확인 -> 추세 제거 확인 Measure

: ADF 검정통계량은 정상이라고 해도 데이터에 계절성이 포함되면 ACF의 비정상 Lag 존재하는 비정상데이터 가능

KPSS 정상성 확인 -> 계절성 제거 확인 Measure

: KPSS 검정통계량은 정상이라고 해도 데이터에 추세가 포함되면 ACF의 비정상 Lag 존재하는 비정상데이터 가능

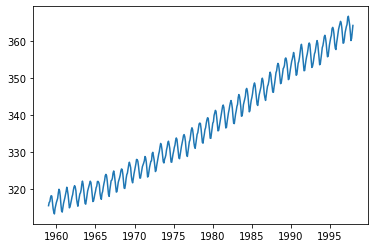

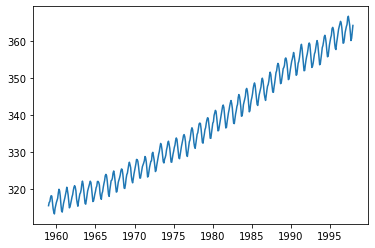

실습: 대기중 CO2농도 추세 제거¶

# 라이브러리 및 데이터 로딩

import pandas as pd

from statsmodels import datasets

import matplotlib.pyplot as plt

import statsmodels.api as sm

%reload_ext autoreload

%autoreload 2

from module import stationarity_adf_test, stationarity_kpss_test

raw_set = datasets.get_rdataset("CO2", package="datasets")

raw = raw_set.data

---------------------------------------------------------------------------

ModuleNotFoundError Traceback (most recent call last)

<ipython-input-7-c1948bb6176f> in <module>

6 get_ipython().run_line_magic('reload_ext', 'autoreload')

7 get_ipython().run_line_magic('autoreload', '2')

----> 8 from module import stationarity_adf_test, stationarity_kpss_test

9

10 raw_set = datasets.get_rdataset("CO2", package="datasets")

ModuleNotFoundError: No module named 'module'

# 데이터 확인 및 추세 추정 (선형)

display(raw.head())

plt.plot(raw.time, raw.value)

plt.show()

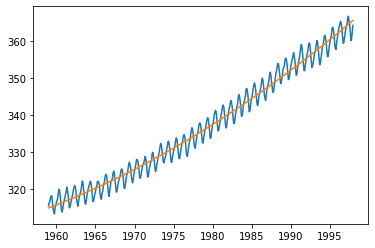

result = sm.OLS.from_formula(formula='value~time', data=raw).fit()

display(result.summary())

trend = result.params[0] + result.params[1] * raw.time

plt.plot(raw.time, raw.value, raw.time, trend)

plt.show()

| time | value | |

|---|---|---|

| 0 | 1,959.00 | 315.42 |

| 1 | 1,959.08 | 316.31 |

| 2 | 1,959.17 | 316.50 |

| 3 | 1,959.25 | 317.56 |

| 4 | 1,959.33 | 318.13 |

| Dep. Variable: | value | R-squared: | 0.969 |

|---|---|---|---|

| Model: | OLS | Adj. R-squared: | 0.969 |

| Method: | Least Squares | F-statistic: | 1.479e+04 |

| Date: | Fri, 31 Jul 2020 | Prob (F-statistic): | 0.00 |

| Time: | 22:45:19 | Log-Likelihood: | -1113.5 |

| No. Observations: | 468 | AIC: | 2231. |

| Df Residuals: | 466 | BIC: | 2239. |

| Df Model: | 1 | ||

| Covariance Type: | nonrobust |

| coef | std err | t | P>|t| | [0.025 | 0.975] | |

|---|---|---|---|---|---|---|

| Intercept | -2249.7742 | 21.268 | -105.784 | 0.000 | -2291.566 | -2207.982 |

| time | 1.3075 | 0.011 | 121.634 | 0.000 | 1.286 | 1.329 |

| Omnibus: | 15.857 | Durbin-Watson: | 0.212 |

|---|---|---|---|

| Prob(Omnibus): | 0.000 | Jarque-Bera (JB): | 7.798 |

| Skew: | 0.048 | Prob(JB): | 0.0203 |

| Kurtosis: | 2.375 | Cond. No. | 3.48e+05 |

Warnings:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The condition number is large, 3.48e+05. This might indicate that there are

strong multicollinearity or other numerical problems.

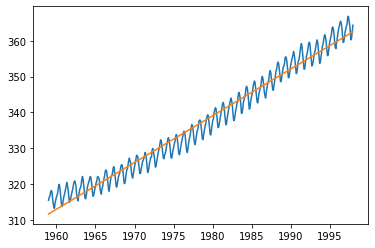

# 데이터 확인 및 추세 추정 (비선형)

display(raw.head())

plt.plot(raw.time, raw.value)

plt.show()

result = sm.OLS.from_formula(formula='value~time+I(time**2)', data=raw).fit()

display(result.summary())

trend = result.params[0] + result.params[1] * raw.time + result.params[2] * raw.time**2

plt.plot(raw.time, raw.value, raw.time, trend)

plt.show()

| time | value | |

|---|---|---|

| 0 | 1,959.00 | 315.42 |

| 1 | 1,959.08 | 316.31 |

| 2 | 1,959.17 | 316.50 |

| 3 | 1,959.25 | 317.56 |

| 4 | 1,959.33 | 318.13 |

| Dep. Variable: | value | R-squared: | 0.979 |

|---|---|---|---|

| Model: | OLS | Adj. R-squared: | 0.979 |

| Method: | Least Squares | F-statistic: | 1.075e+04 |

| Date: | Fri, 31 Jul 2020 | Prob (F-statistic): | 0.00 |

| Time: | 22:45:20 | Log-Likelihood: | -1027.8 |

| No. Observations: | 468 | AIC: | 2062. |

| Df Residuals: | 465 | BIC: | 2074. |

| Df Model: | 2 | ||

| Covariance Type: | nonrobust |

| coef | std err | t | P>|t| | [0.025 | 0.975] | |

|---|---|---|---|---|---|---|

| Intercept | 4.77e+04 | 3482.902 | 13.696 | 0.000 | 4.09e+04 | 5.45e+04 |

| time | -49.1907 | 3.521 | -13.971 | 0.000 | -56.110 | -42.272 |

| I(time ** 2) | 0.0128 | 0.001 | 14.342 | 0.000 | 0.011 | 0.015 |

| Omnibus: | 66.659 | Durbin-Watson: | 0.306 |

|---|---|---|---|

| Prob(Omnibus): | 0.000 | Jarque-Bera (JB): | 17.850 |

| Skew: | -0.116 | Prob(JB): | 0.000133 |

| Kurtosis: | 2.072 | Cond. No. | 1.35e+11 |

Warnings:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

[2] The condition number is large, 1.35e+11. This might indicate that there are

strong multicollinearity or other numerical problems.

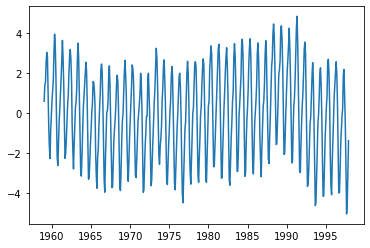

# 추세 제거 및 정상성 확인

## 방법1

plt.plot(raw.time, result.resid)

plt.show()

display(stationarity_adf_test(result.resid, []))

display(stationarity_kpss_test(result.resid, []))

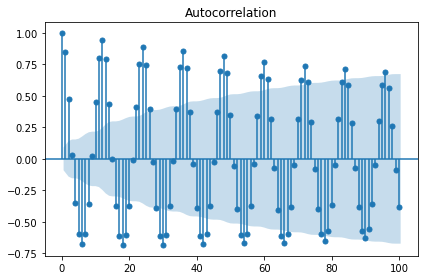

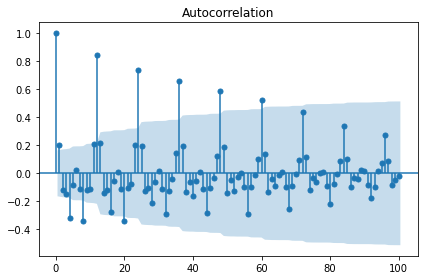

sm.graphics.tsa.plot_acf(result.resid, lags=100, use_vlines=True)

plt.tight_layout()

plt.show()

| Stationarity_adf | |

|---|---|

| Test Statistics | -2.53 |

| p-value | 0.11 |

| Used Lag | 13.00 |

| Used Observations | 454.00 |

| Critical Value(1%) | -3.44 |

| Maximum Information Criteria | 260.10 |

| Stationarity_kpss | |

|---|---|

| Test Statistics | 0.17 |

| p-value | 0.10 |

| Used Lag | 18.00 |

| Critical Value(10%) | 0.35 |

# 추세 제거 및 정상성 확인

## 방법2

plt.plot(raw.time[1:], raw.value.diff(1).dropna())

plt.show()

display(stationarity_adf_test(raw.value.diff(1).dropna(), []))

display(stationarity_kpss_test(raw.value.diff(1).dropna(), []))

sm.graphics.tsa.plot_acf(raw.value.diff(1).dropna(), lags=100, use_vlines=True)

plt.tight_layout()

plt.show()

| Stationarity_adf | |

|---|---|

| Test Statistics | -5.14 |

| p-value | 0.00 |

| Used Lag | 12.00 |

| Used Observations | 454.00 |

| Critical Value(1%) | -3.44 |

| Maximum Information Criteria | 271.87 |

| Stationarity_kpss | |

|---|---|

| Test Statistics | 0.04 |

| p-value | 0.10 |

| Used Lag | 18.00 |

| Critical Value(10%) | 0.35 |

실습: 호흡기질환 사망자수 계절성 제거¶

# 라이브러리 및 데이터 로딩

import pandas as pd

from statsmodels import datasets

import matplotlib.pyplot as plt

import statsmodels.api as sm

%reload_ext autoreload

%autoreload 2

from module import stationarity_adf_test, stationarity_kpss_test

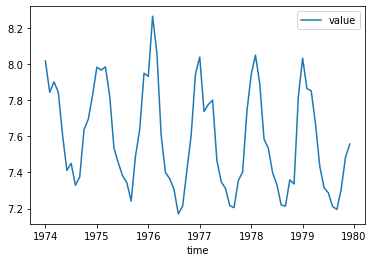

raw_set = datasets.get_rdataset("deaths", package="MASS")

raw = raw_set.data

# 시간변수 추출

raw.time = pd.date_range('1974-01-01', periods=len(raw), freq='M')

raw['month'] = raw.time.dt.month

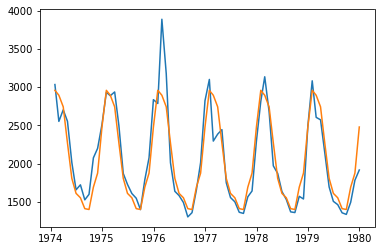

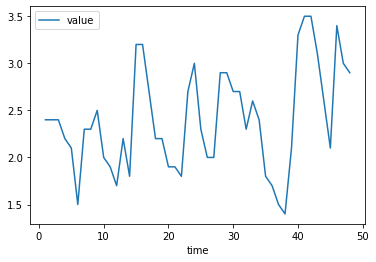

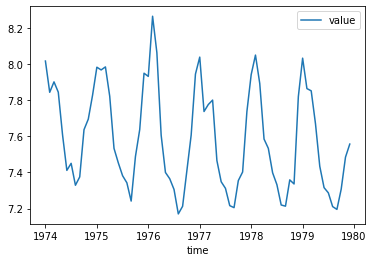

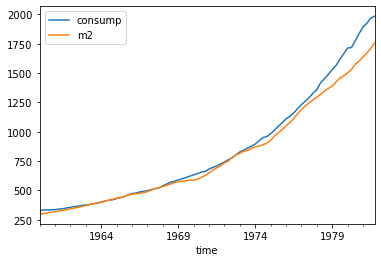

# 데이터 확인 및 추세 추정

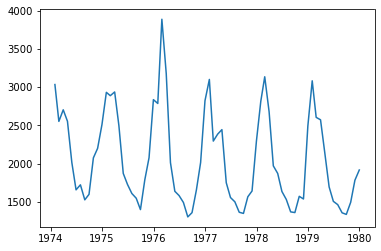

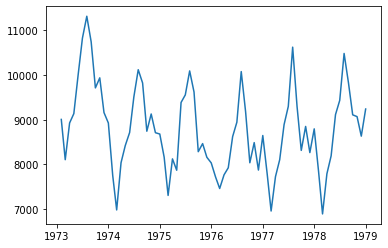

display(raw.tail())

plt.plot(raw.time, raw.value)

plt.show()

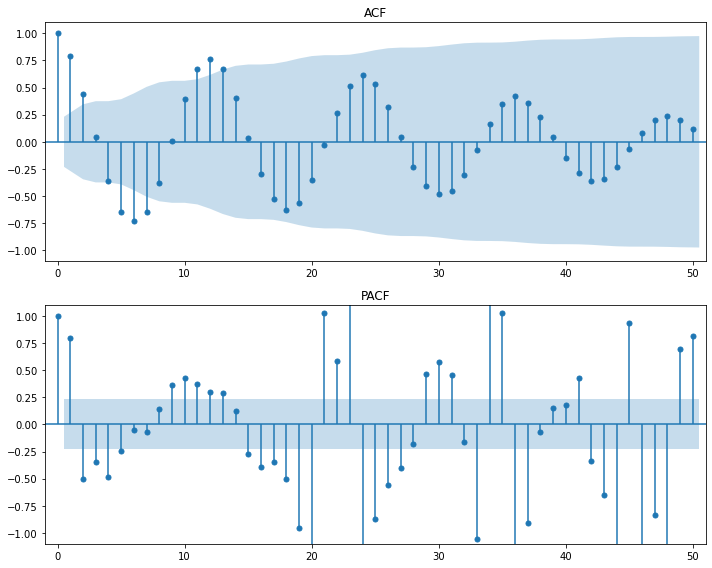

display(stationarity_adf_test(raw.value, []))

display(stationarity_kpss_test(raw.value, []))

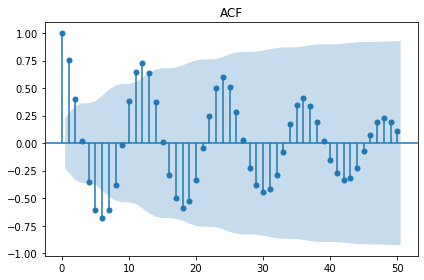

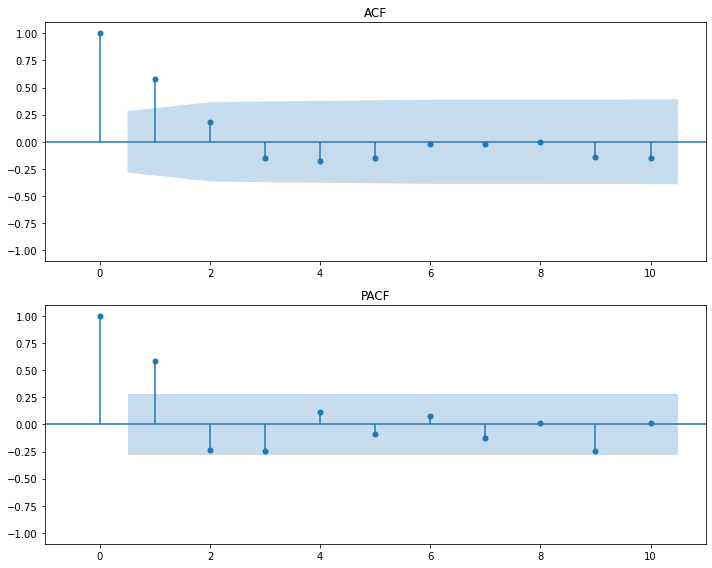

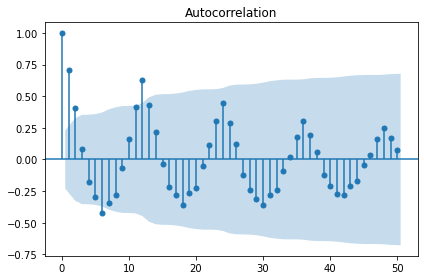

sm.graphics.tsa.plot_acf(raw.value, lags=50, use_vlines=True, title='ACF')

plt.tight_layout()

plt.show()

result = sm.OLS.from_formula(formula='value ~ C(month) - 1', data=raw).fit()

display(result.summary())

plt.plot(raw.time, raw.value, raw.time, result.fittedvalues)

plt.show()

| time | value | month | |

|---|---|---|---|

| 67 | 1979-08-31 | 1354 | 8 |

| 68 | 1979-09-30 | 1333 | 9 |

| 69 | 1979-10-31 | 1492 | 10 |

| 70 | 1979-11-30 | 1781 | 11 |

| 71 | 1979-12-31 | 1915 | 12 |

| Stationarity_adf | |

|---|---|

| Test Statistics | -0.57 |

| p-value | 0.88 |

| Used Lag | 12.00 |

| Used Observations | 59.00 |

| Critical Value(1%) | -3.55 |

| Maximum Information Criteria | 841.38 |

| Stationarity_kpss | |

|---|---|

| Test Statistics | 0.65 |

| p-value | 0.02 |

| Used Lag | 12.00 |

| Critical Value(10%) | 0.35 |

| Dep. Variable: | value | R-squared: | 0.853 |

|---|---|---|---|

| Model: | OLS | Adj. R-squared: | 0.826 |

| Method: | Least Squares | F-statistic: | 31.66 |

| Date: | Fri, 31 Jul 2020 | Prob (F-statistic): | 6.55e-21 |

| Time: | 22:45:23 | Log-Likelihood: | -494.38 |

| No. Observations: | 72 | AIC: | 1013. |

| Df Residuals: | 60 | BIC: | 1040. |

| Df Model: | 11 | ||

| Covariance Type: | nonrobust |

| coef | std err | t | P>|t| | [0.025 | 0.975] | |

|---|---|---|---|---|---|---|

| C(month)[1] | 2959.3333 | 103.831 | 28.502 | 0.000 | 2751.641 | 3167.025 |

| C(month)[2] | 2894.6667 | 103.831 | 27.879 | 0.000 | 2686.975 | 3102.359 |

| C(month)[3] | 2743.0000 | 103.831 | 26.418 | 0.000 | 2535.308 | 2950.692 |

| C(month)[4] | 2269.6667 | 103.831 | 21.859 | 0.000 | 2061.975 | 2477.359 |

| C(month)[5] | 1805.1667 | 103.831 | 17.386 | 0.000 | 1597.475 | 2012.859 |

| C(month)[6] | 1608.6667 | 103.831 | 15.493 | 0.000 | 1400.975 | 1816.359 |

| C(month)[7] | 1550.8333 | 103.831 | 14.936 | 0.000 | 1343.141 | 1758.525 |

| C(month)[8] | 1408.3333 | 103.831 | 13.564 | 0.000 | 1200.641 | 1616.025 |

| C(month)[9] | 1397.3333 | 103.831 | 13.458 | 0.000 | 1189.641 | 1605.025 |

| C(month)[10] | 1690.0000 | 103.831 | 16.277 | 0.000 | 1482.308 | 1897.692 |

| C(month)[11] | 1874.0000 | 103.831 | 18.049 | 0.000 | 1666.308 | 2081.692 |

| C(month)[12] | 2478.5000 | 103.831 | 23.871 | 0.000 | 2270.808 | 2686.192 |

| Omnibus: | 19.630 | Durbin-Watson: | 1.374 |

|---|---|---|---|

| Prob(Omnibus): | 0.000 | Jarque-Bera (JB): | 49.630 |

| Skew: | 0.787 | Prob(JB): | 1.67e-11 |

| Kurtosis: | 6.750 | Cond. No. | 1.00 |

Warnings:

[1] Standard Errors assume that the covariance matrix of the errors is correctly specified.

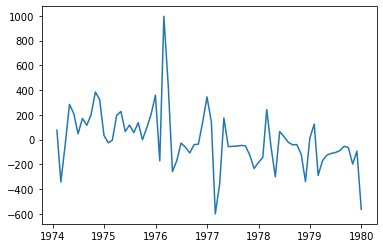

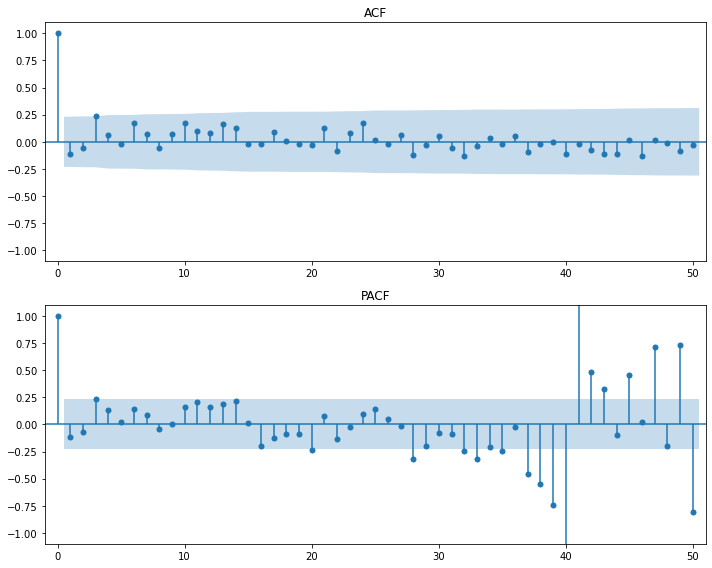

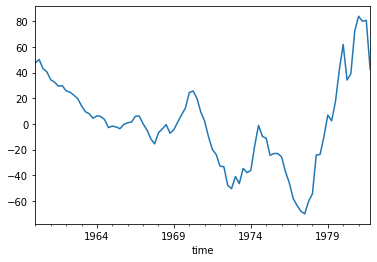

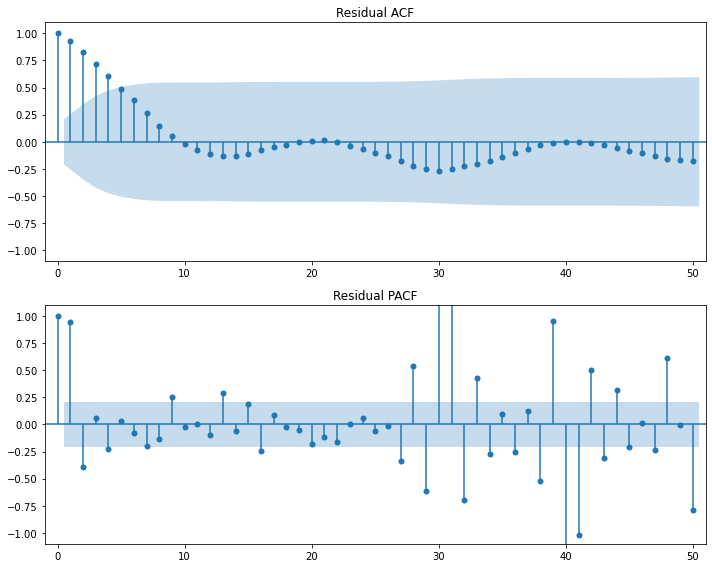

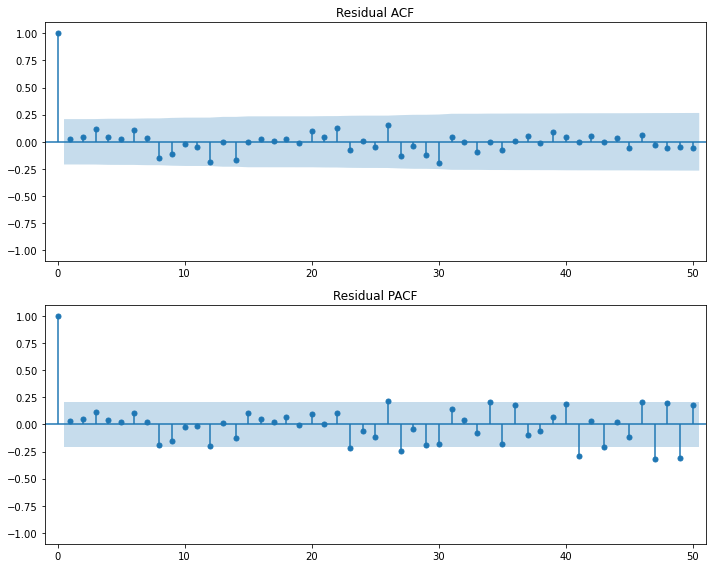

# 추세 제거 및 정상성 확인

## 방법1

plt.plot(raw.time, result.resid)

plt.show()

display(stationarity_adf_test(result.resid, []))

display(stationarity_kpss_test(result.resid, []))

sm.graphics.tsa.plot_acf(result.resid, lags=50, use_vlines=True)

plt.tight_layout()

plt.show()

| Stationarity_adf | |

|---|---|

| Test Statistics | -5.84 |

| p-value | 0.00 |

| Used Lag | 0.00 |

| Used Observations | 71.00 |

| Critical Value(1%) | -3.53 |

| Maximum Information Criteria | 812.36 |

| Stationarity_kpss | |

|---|---|

| Test Statistics | 0.54 |

| p-value | 0.03 |

| Used Lag | 12.00 |

| Critical Value(10%) | 0.35 |

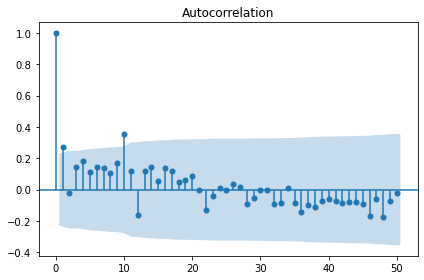

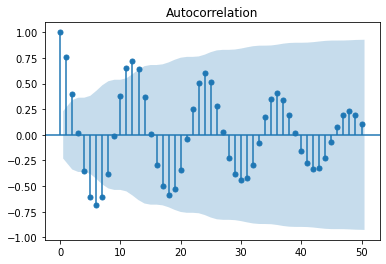

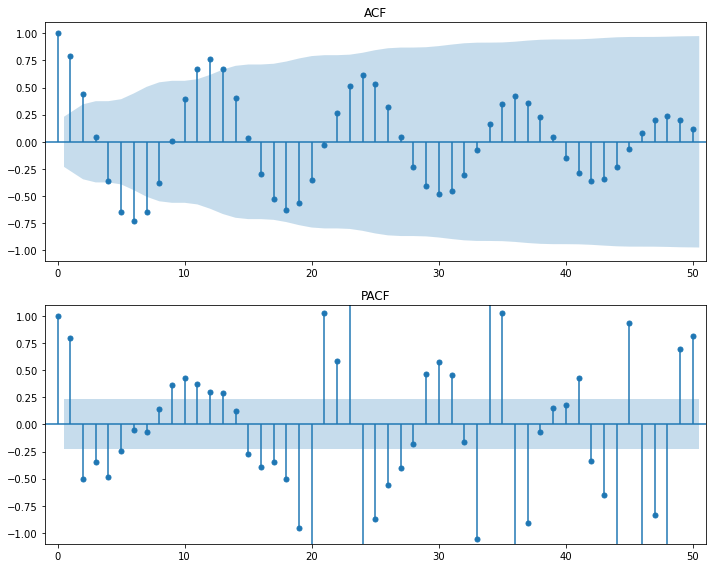

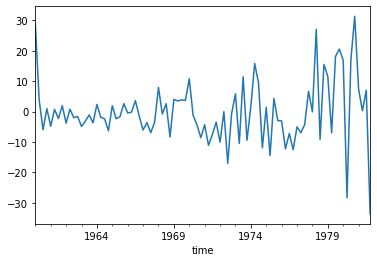

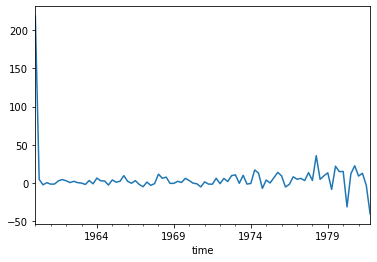

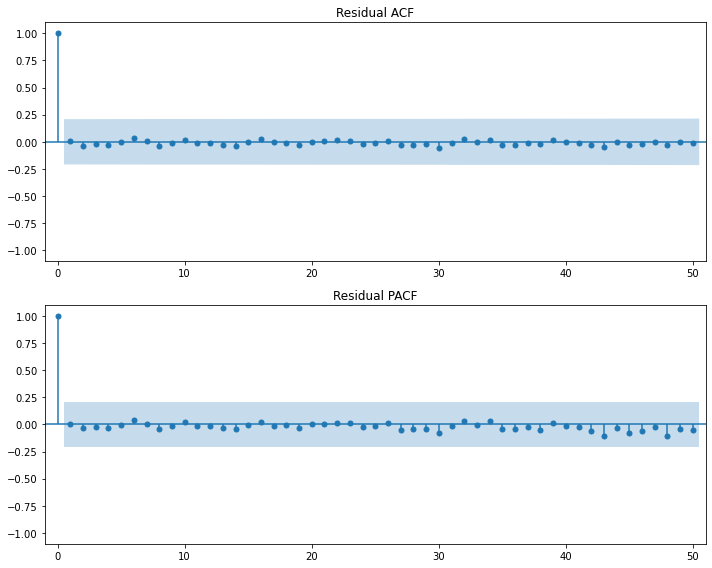

# 계절성 제거 및 정상성 확인

## 방법2

sm.graphics.tsa.plot_acf(raw.value, lags=50, use_vlines=True)

plt.show()

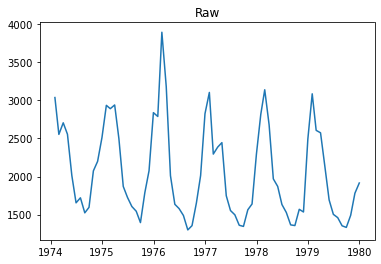

plt.plot(raw.time, raw.value)

plt.title('Raw')

plt.show()

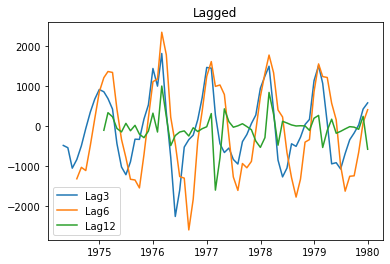

seasonal_lag = 3

plt.plot(raw.time[seasonal_lag:], raw.value.diff(seasonal_lag).dropna(), label='Lag{}'.format(seasonal_lag))

seasonal_lag = 6

plt.plot(raw.time[seasonal_lag:], raw.value.diff(seasonal_lag).dropna(), label='Lag{}'.format(seasonal_lag))

seasonal_lag = 12

plt.plot(raw.time[seasonal_lag:], raw.value.diff(seasonal_lag).dropna(), label='Lag{}'.format(seasonal_lag))

plt.title('Lagged')

plt.legend()

plt.show()

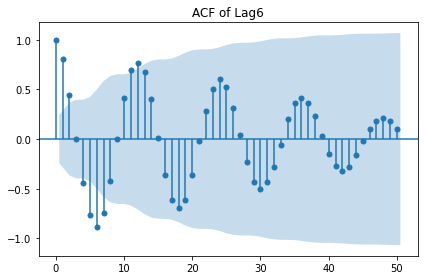

seasonal_lag = 6

display(stationarity_adf_test(raw.value.diff(seasonal_lag).dropna(), []))

display(stationarity_kpss_test(raw.value.diff(seasonal_lag).dropna(), []))

sm.graphics.tsa.plot_acf(raw.value.diff(seasonal_lag).dropna(), lags=50,

use_vlines=True, title='ACF of Lag{}'.format(seasonal_lag))

plt.tight_layout()

plt.show()

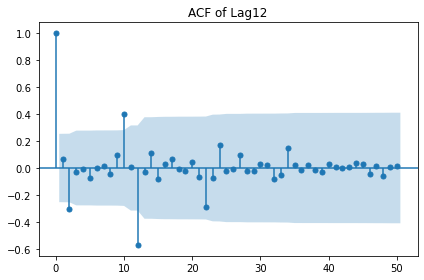

seasonal_lag = 12

display(stationarity_adf_test(raw.value.diff(seasonal_lag).dropna(), []))

display(stationarity_kpss_test(raw.value.diff(seasonal_lag).dropna(), []))

sm.graphics.tsa.plot_acf(raw.value.diff(seasonal_lag).dropna(), lags=50,

use_vlines=True, title='ACF of Lag{}'.format(seasonal_lag))

plt.tight_layout()

plt.show()

| Stationarity_adf | |

|---|---|

| Test Statistics | -4.30 |

| p-value | 0.00 |

| Used Lag | 11.00 |

| Used Observations | 54.00 |

| Critical Value(1%) | -3.56 |

| Maximum Information Criteria | 786.67 |

| Stationarity_kpss | |

|---|---|

| Test Statistics | 0.35 |

| p-value | 0.10 |

| Used Lag | 11.00 |

| Critical Value(10%) | 0.35 |

| Stationarity_adf | |

|---|---|

| Test Statistics | -2.14 |

| p-value | 0.23 |

| Used Lag | 11.00 |

| Used Observations | 48.00 |

| Critical Value(1%) | -3.57 |

| Maximum Information Criteria | 703.72 |

| Stationarity_kpss | |

|---|---|

| Test Statistics | 0.09 |

| p-value | 0.10 |

| Used Lag | 11.00 |

| Critical Value(10%) | 0.35 |

실습: 랜덤워크의 정상성 변환¶

# 라이브러리 호출

import pandas as pd

import numpy as np

import statsmodels.api as sm

from random import seed, random

import matplotlib.pyplot as plt

%reload_ext autoreload

%autoreload 2

from module import stationarity_adf_test, stationarity_kpss_test

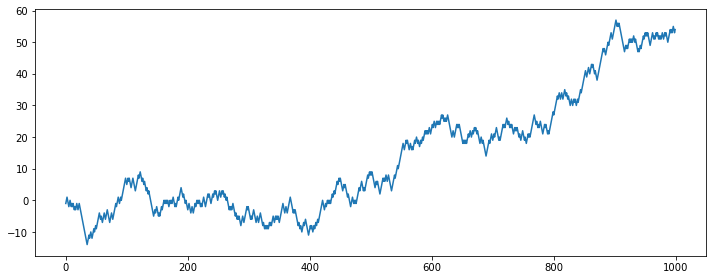

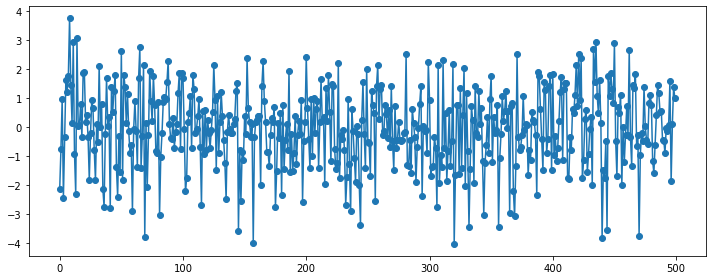

# 랜덤워크 데이터 생성

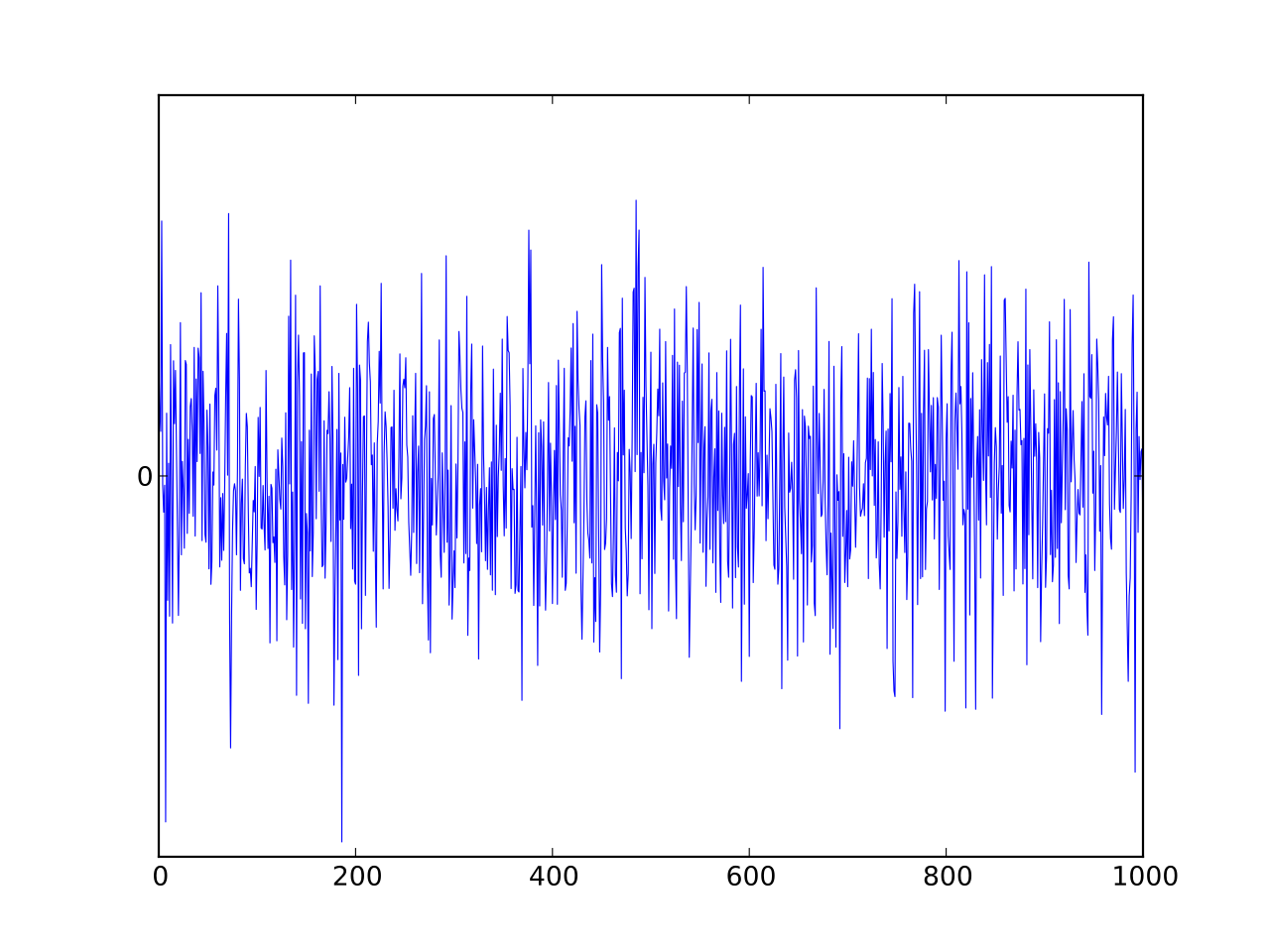

plt.figure(figsize=(10, 4))

seed(1)

random_walk = [-1 if random() < 0.5 else 1]

for i in range(1, 1000):

movement = -1 if random() < 0.5 else 1

value = random_walk[i-1] + movement

random_walk.append(value)

plt.plot(random_walk)

plt.tight_layout()

plt.show()

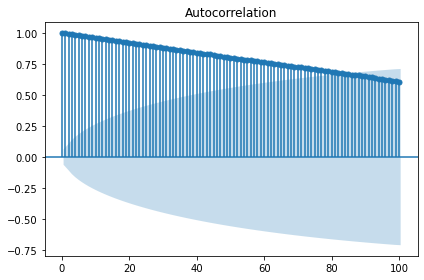

# 차분 전 랜덤워크 정상성 테스트

display('Before a difference:')

display(stationarity_adf_test(random_walk, []))

display(stationarity_kpss_test(random_walk, []))

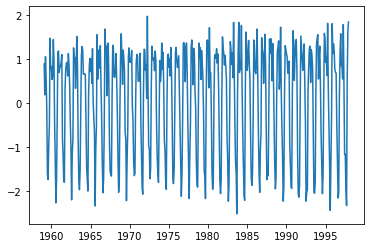

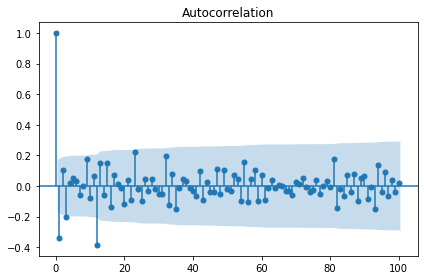

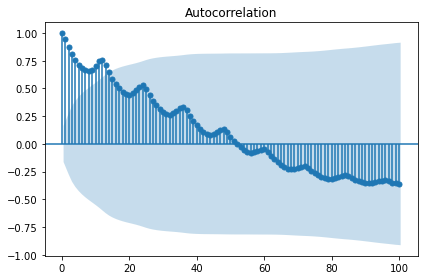

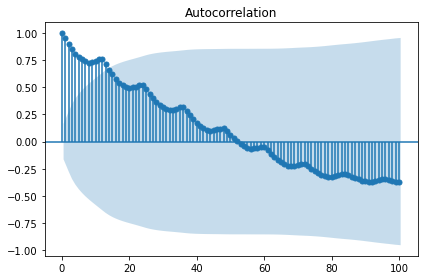

sm.graphics.tsa.plot_acf(random_walk, lags=100, use_vlines=True)

plt.tight_layout()

plt.show()

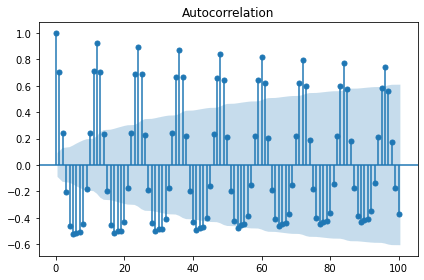

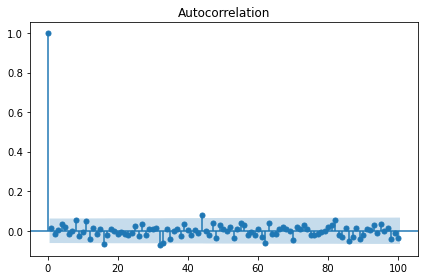

# 차분 후 랜덤워크 정상성 테스트

display('After a difference:')

display(stationarity_adf_test(pd.Series(random_walk).diff(1).dropna(), []))

display(stationarity_kpss_test(pd.Series(random_walk).diff(1).dropna(), []))

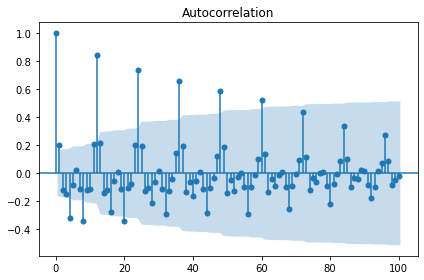

sm.graphics.tsa.plot_acf(pd.Series(random_walk).diff(1).dropna(), lags=100, use_vlines=True)

plt.tight_layout()

plt.show()

'Before a difference:'

| Stationarity_adf | |

|---|---|

| Test Statistics | 0.34 |

| p-value | 0.98 |

| Used Lag | 0.00 |

| Used Observations | 999.00 |

| Critical Value(1%) | -3.44 |

| Maximum Information Criteria | 2,773.39 |

| Stationarity_kpss | |

|---|---|

| Test Statistics | 3.75 |

| p-value | 0.01 |

| Used Lag | 22.00 |

| Critical Value(10%) | 0.35 |

'After a difference:'

| Stationarity_adf | |

|---|---|

| Test Statistics | -31.08 |

| p-value | 0.00 |

| Used Lag | 0.00 |

| Used Observations | 998.00 |

| Critical Value(1%) | -3.44 |

| Maximum Information Criteria | 2,770.18 |

| Stationarity_kpss | |

|---|---|

| Test Statistics | 0.22 |

| p-value | 0.10 |

| Used Lag | 22.00 |

| Critical Value(10%) | 0.35 |

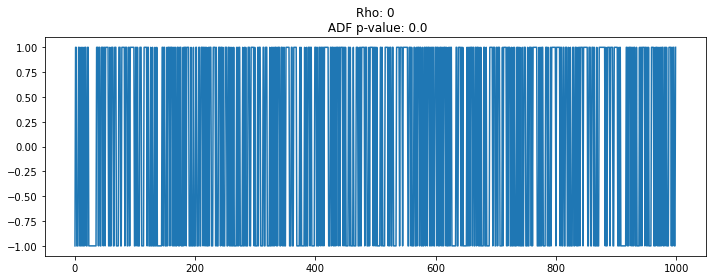

# 랜덤워크 데이터 생성 및 통계량 Test

plt.figure(figsize=(10, 4))

seed(1)

rho = 0

random_walk = [-1 if random() < 0.5 else 1]

for i in range(1, 1000):

movement = -1 if random() < 0.5 else 1

value = rho * random_walk[i-1] + movement

random_walk.append(value)

plt.plot(random_walk)

plt.title('Rho: {}\n ADF p-value: {}'.format(rho, np.ravel(stationarity_adf_test(random_walk, []))[1]))

plt.tight_layout()

plt.show()

# rho 값을 변화시키면서 언제 비정상이 되는지 파악?

# 정상성의 계수 범위 추론 가능!

실습: 항공사 승객수요 스케일 변환(Log / Box-Cox)¶

# 라이브러리 호출

import numpy as np

import matplotlib.pyplot as plt

import statsmodels.api as sm

import scipy as sp

%reload_ext autoreload

%autoreload 2

from module import stationarity_adf_test, stationarity_kpss_test

# 데이터 준비

data = sm.datasets.get_rdataset("AirPassengers")

raw = data.data.copy()

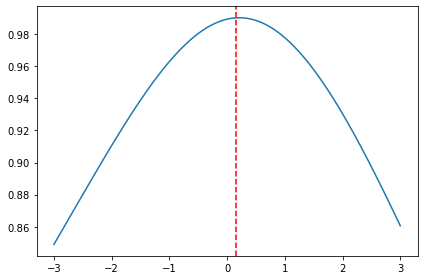

# Box-Cox 변환 모수 추정

# 정규분포의 특정 범위(x)에서 lambda를 바꿔가며 정규성(measure:y)이 가장 높은 lambda(l_opt)를 선정

x, y = sp.stats.boxcox_normplot(raw.value, la=-3, lb=3)

y_transfer, l_opt = sp.stats.boxcox(raw.value)

print('Optimal Lambda: ', l_opt)

plt.plot(x, y)

plt.axvline(x=l_opt, color='r', ls="--")

plt.tight_layout()

plt.show()

Optimal Lambda: 0.14802265137037945

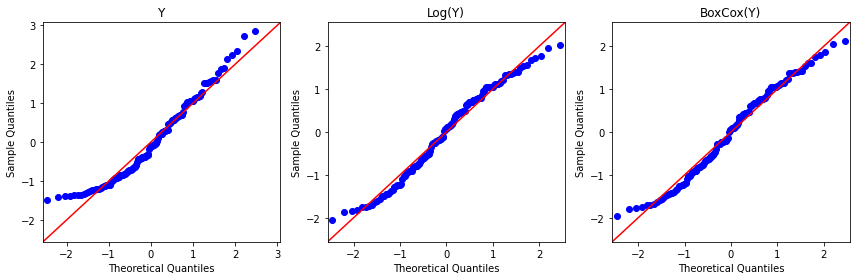

plt.figure(figsize=(12,4))

sm.qqplot(raw.value, fit=True, line='45', ax=plt.subplot(131))

plt.title('Y')

sm.qqplot(np.log(raw.value), fit=True, line='45', ax=plt.subplot(132))

plt.title('Log(Y)')

sm.qqplot(y_transfer, fit=True, line='45', ax=plt.subplot(133))

plt.title('BoxCox(Y)')

plt.tight_layout()

plt.show()

실습: 항공사 승객수요 정상성 변환¶

# 라이브러리 호출

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import statsmodels.api as sm

%reload_ext autoreload

%autoreload 2

from module import stationarity_adf_test, stationarity_kpss_test

# 데이터 준비

data = sm.datasets.get_rdataset("AirPassengers")

raw = data.data.copy()

# 데이터 전처리

## 시간 인덱싱

if 'time' in raw.columns:

raw.index = pd.date_range(start='1/1/1949', periods=len(raw['time']), freq='M')

del raw['time']

## 정상성 확보

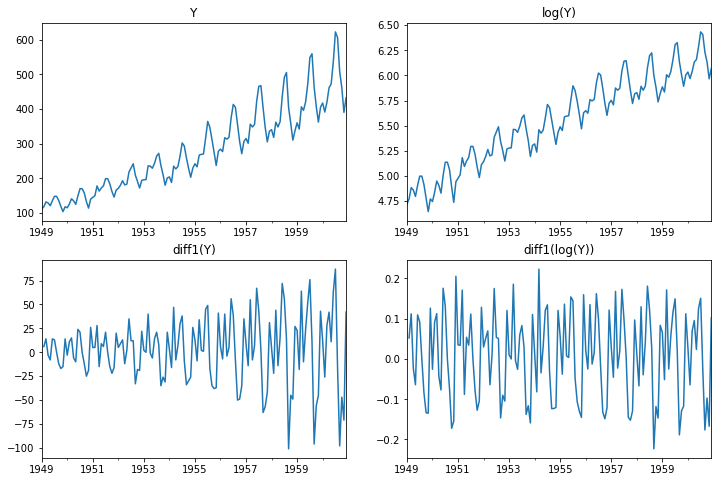

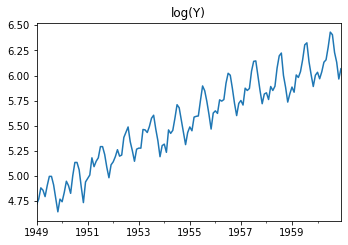

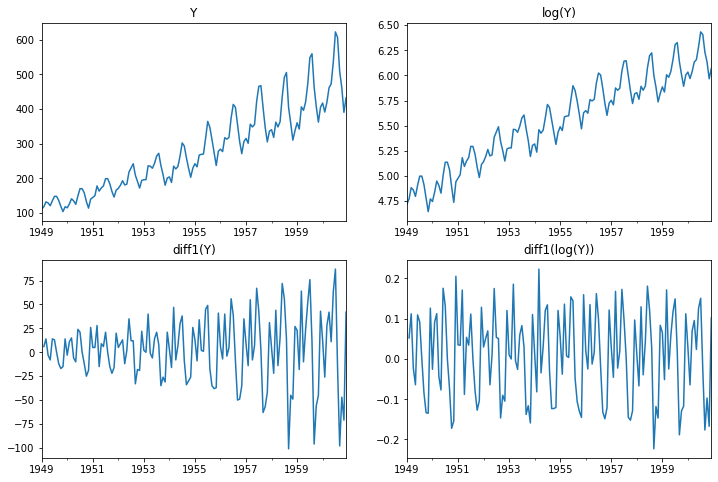

plt.figure(figsize=(12,8))

raw.plot(ax=plt.subplot(221), title='Y', legend=False)

np.log(raw).plot(ax=plt.subplot(222), title='log(Y)', legend=False)

raw.diff(1).plot(ax=plt.subplot(223), title='diff1(Y)', legend=False)

np.log(raw).diff(1).plot(ax=plt.subplot(224), title='diff1(log(Y))', legend=False)

plt.show()

## 정상성 테스트

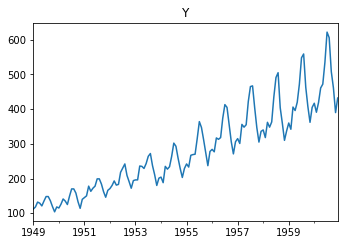

### 미변환

display('Non-transfer:')

plt.figure(figsize=(12,8))

raw.plot(ax=plt.subplot(222), title='Y', legend=False)

plt.show()

candidate_none = raw.copy()

display(stationarity_adf_test(candidate_none.values.flatten(), []))

display(stationarity_kpss_test(candidate_none.values.flatten(), []))

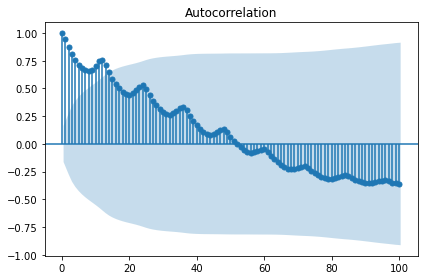

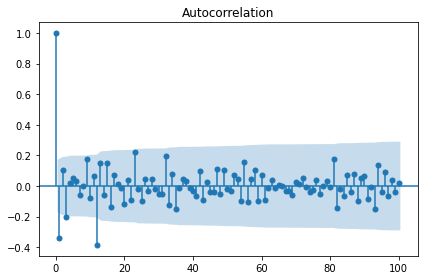

sm.graphics.tsa.plot_acf(candidate_none, lags=100, use_vlines=True)

plt.tight_layout()

plt.show()

### 로그 변환

display('Log transfer:')

plt.figure(figsize=(12,8))

np.log(raw).plot(ax=plt.subplot(222), title='log(Y)', legend=False)

plt.show()

candidate_trend = np.log(raw).copy()

display(stationarity_adf_test(candidate_trend.values.flatten(), []))

display(stationarity_kpss_test(candidate_trend.values.flatten(), []))

sm.graphics.tsa.plot_acf(candidate_trend, lags=100, use_vlines=True)

plt.tight_layout()

plt.show()

trend_diff_order_initial = 0

result = stationarity_adf_test(candidate_trend.values.flatten(), []).T

if result['p-value'].values.flatten() < 0.1:

trend_diff_order = trend_diff_order_initial

else:

trend_diff_order = trend_diff_order_initial + 1

print('Trend Difference: ', trend_diff_order)

### 로그+추세차분 변환

display('Log and trend diffrence transfer:')

plt.figure(figsize=(12,8))

np.log(raw).diff(trend_diff_order).plot(ax=plt.subplot(224), title='diff1(log(Y))', legend=False)

plt.show()

candidate_seasonal = candidate_trend.diff(trend_diff_order).dropna().copy()

display(stationarity_adf_test(candidate_seasonal.values.flatten(), []))

display(stationarity_kpss_test(candidate_seasonal.values.flatten(), []))

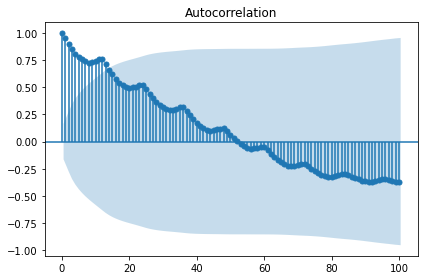

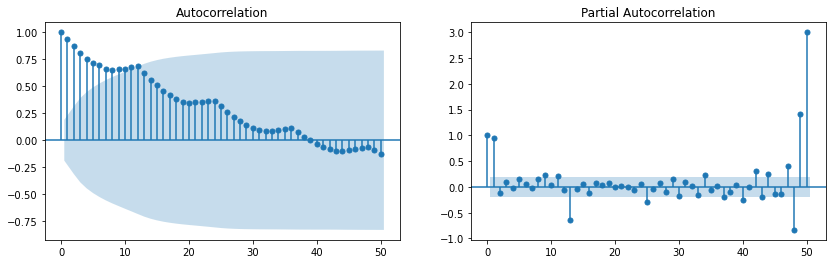

sm.graphics.tsa.plot_acf(candidate_seasonal, lags=100, use_vlines=True)

plt.tight_layout()

plt.show()

seasonal_diff_order = sm.tsa.acf(candidate_seasonal)[1:].argmax() + 1

print('Seasonal Difference: ', seasonal_diff_order)

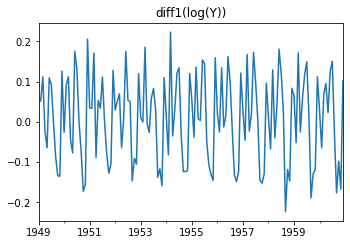

### 로그+추세차분+계절차분 변환

display('Log and trend+seasonal diffrence transfer:')

candidate_final = candidate_seasonal.diff(seasonal_diff_order).dropna().copy()

display(stationarity_adf_test(candidate_final.values.flatten(), []))

display(stationarity_kpss_test(candidate_final.values.flatten(), []))

sm.graphics.tsa.plot_acf(candidate_final, lags=100, use_vlines=True)

plt.tight_layout()

plt.show()

'Non-transfer:'

| Stationarity_adf | |

|---|---|

| Test Statistics | 0.82 |

| p-value | 0.99 |

| Used Lag | 13.00 |

| Used Observations | 130.00 |

| Critical Value(1%) | -3.48 |

| Maximum Information Criteria | 996.69 |

| Stationarity_kpss | |

|---|---|

| Test Statistics | 1.05 |

| p-value | 0.01 |

| Used Lag | 14.00 |

| Critical Value(10%) | 0.35 |

'Log transfer:'

| Stationarity_adf | |

|---|---|

| Test Statistics | -1.72 |

| p-value | 0.42 |

| Used Lag | 13.00 |

| Used Observations | 130.00 |

| Critical Value(1%) | -3.48 |

| Maximum Information Criteria | -445.40 |

| Stationarity_kpss | |

|---|---|

| Test Statistics | 1.05 |

| p-value | 0.01 |

| Used Lag | 14.00 |

| Critical Value(10%) | 0.35 |

Trend Difference: 1

'Log and trend diffrence transfer:'

| Stationarity_adf | |

|---|---|

| Test Statistics | -2.72 |

| p-value | 0.07 |

| Used Lag | 14.00 |

| Used Observations | 128.00 |

| Critical Value(1%) | -3.48 |

| Maximum Information Criteria | -440.36 |

| Stationarity_kpss | |

|---|---|

| Test Statistics | 0.10 |

| p-value | 0.10 |

| Used Lag | 14.00 |

| Critical Value(10%) | 0.35 |

Seasonal Difference: 12

'Log and trend+seasonal diffrence transfer:'

| Stationarity_adf | |

|---|---|

| Test Statistics | -4.44 |

| p-value | 0.00 |

| Used Lag | 12.00 |

| Used Observations | 118.00 |

| Critical Value(1%) | -3.49 |

| Maximum Information Criteria | -415.56 |

| Stationarity_kpss | |

|---|---|

| Test Statistics | 0.11 |

| p-value | 0.10 |

| Used Lag | 13.00 |

| Critical Value(10%) | 0.35 |

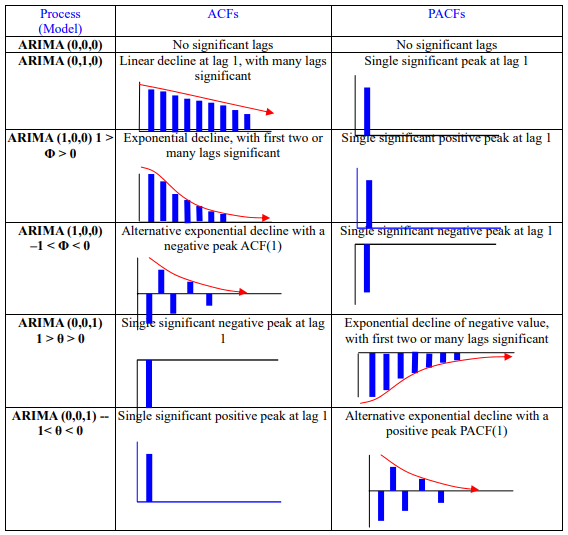

일반 선형확률과정(General Linear Process)¶

“시계열 데이터가 가우시안 백색잡음의 현재값과 과거값의 선형조합”

\begin{align*} Y_t = \epsilon_t + \psi_1\epsilon_{t-1} + \psi_2\epsilon_{t-2} + \cdots \ \end{align*} \begin{align*} where~\epsilon_i \sim i.i.d.~WN(0, \sigma_{\epsilon_i}^2)~and~\displaystyle \sum_{i=1}^{\infty}\psi_i^2 < \infty \end{align*}

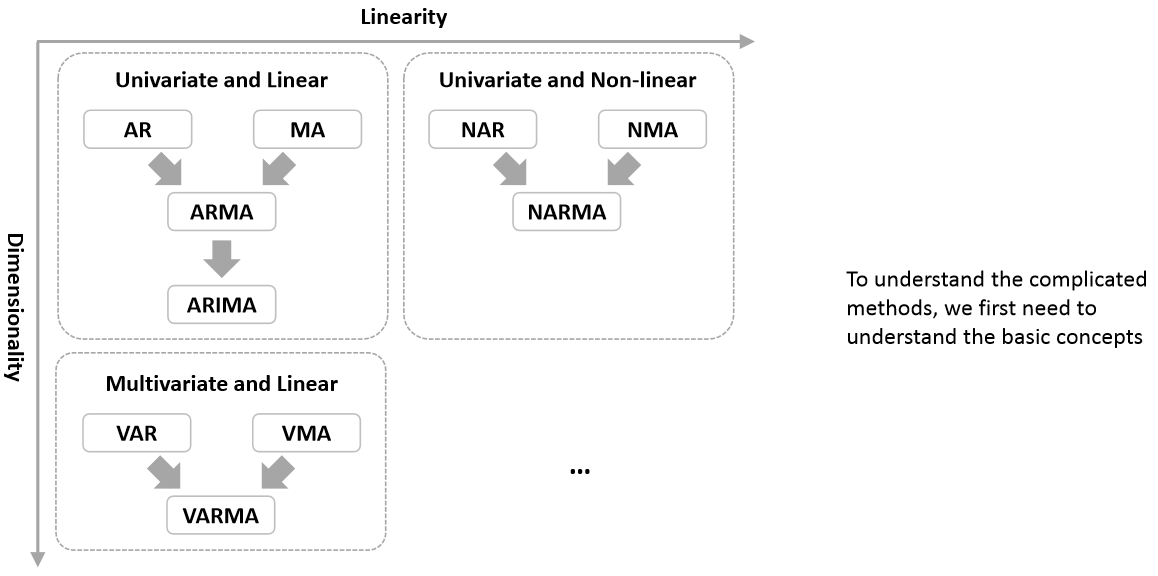

세부 알고리즘:

WN(White Noise)

MA(Moving Average)

AR(Auto-Regressive)

ARMA(Auto-Regressive Moving Average)

ARIMA(Auto-Regressive Integrated Moving Average)

SARIMA(Seasonal ARIMA)

WN(White Noise)¶

1) 잔차들은 정규분포이고, (unbiased) 평균 0과 일정한 분산을 가져야 함:

\begin{align*} {\epsilon_t : t = \dots, -2, -1, 0, 1, 2, \dots} \sim N(0,\sigma^2_{\epsilon_t}) \ \end{align*} \begin{align*} where~~ \epsilon_t \sim i.i.d(independent~and~identically~distributed) \ \end{align*} \begin{align*} \epsilon_t = Y_t - \hat{Y_t}, ;; E(\epsilon_t) = 0, ;; Var(\epsilon_t) = \sigma^2_{\epsilon_t} \ \end{align*} \begin{align*} Cov(\epsilon_s, \epsilon_k) = 0~for~different~times!(s \ne k) \end{align*}

2) 잔차들이 시간의 흐름에 따라 상관성이 없어야 함:

자기상관함수(Autocorrelation Fundtion(ACF))를 통해 \(Autocorrelation~=~0\)인지 확인

공분산(Covariance):

$Cov(Y_s, Y_k)$ = $E[(Y_s-E(Y_s))$$(Y_k-E(Y_k))]$ = $\gamma_{s,k}$ - 자기상관함수(Autocorrelation Function):$Corr(Y_s, Y_k)$ = $\dfrac{Cov(Y_s, Y_k)}{\sqrt{Var(Y_s)Var(Y_k)}}$ = $\dfrac{\gamma_{s,k}}{\sqrt{\gamma_s \gamma_k}}$ - 편자기상관함수(Partial Autocorrelation Function): $s$와 $k$사이의 상관성을 제거한 자기상관함수$Corr[(Y_s-\hat{Y}_s, Y_{s-t}-\hat{Y}_{s-t})]$ for $1 특성요약:

강정상 과정(Stictly Stationary Process)

강정상 예시로 시계열분석 기본알고리즘 중 가장 중요함

시차(lag)가 0일 경우, 자기공분산은 확률 분포의 분산이 되고 시차가 0이 아닌 경우, 자기공분산은 0.

\begin{align*} \gamma_i = \begin{cases} \text{Var}(\epsilon_t) & ;; \text{ for } i = 0 \

0 & ;; \text{ for } i \neq 0 \end{cases} \end{align*}시차(lag)가 0일 경우, 자기상관계수는 1이 되고 시차가 0이 아닌 경우, 자기상관계수는 0. \begin{align*} \rho_i = \begin{cases} 1 & ;; \text{ for } i = 0 \

0 & ;; \text{ for } i \neq 0 \end{cases} \end{align*}

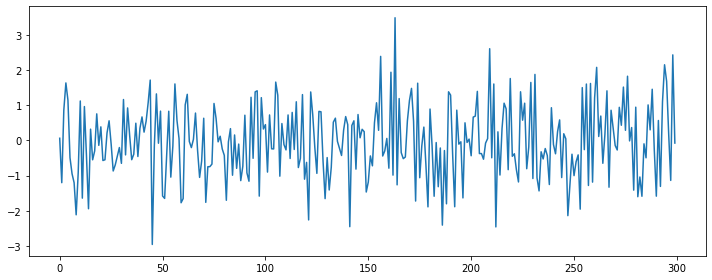

1) 예시: 가우시안 백색잡음

from scipy import stats

import matplotlib.pyplot as plt

plt.figure(figsize=(10, 4))

plt.plot(stats.norm.rvs(size=300))

plt.tight_layout()

plt.show()

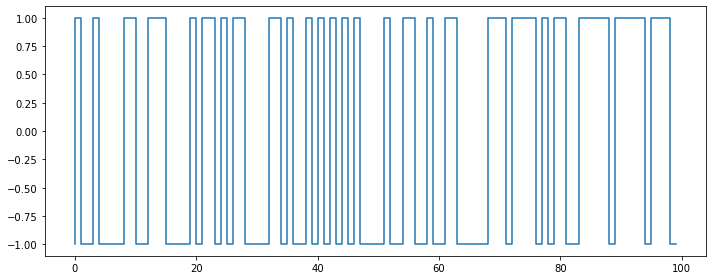

2) 예시: 베르누이 백색잡음

백색잡음의 기반 확률분포가 반드시 정규분포일 필요는 없음

import numpy as np

from scipy import stats

import matplotlib.pyplot as plt

plt.figure(figsize=(10, 4))

samples = stats.bernoulli.rvs(0.5, size=100) * 2 - 1

plt.step(np.arange(len(samples)), samples)

plt.ylim(-1.1, 1.1)

plt.tight_layout()

plt.show()

MA(Moving Average)¶

“\(MA(q)\): 알고리즘의 차수(\(q\))가 유한한 가우시안 백색잡음과정의 선형조합”

Exponential Smoothing 내 Moving Average Smoothing은 과거의 Trend-Cycle을 추정하기 위함이고, MA는 미래 값을 예측하기 위함

\begin{align*} Y_t &= \epsilon_t + \theta_1\epsilon_{t-1} + \theta_2\epsilon_{t-2} + \cdots + \theta_q\epsilon_{t-q} \end{align*} \begin{align*} where~\epsilon_i \sim i.i.d.~WN(0, \sigma_{\epsilon_i}^2)~and~\displaystyle \sum_{i=1}^{\infty}\theta_i^2 < \infty \end{align*} \begin{align*} Y_t &= \epsilon_t + \theta_1\epsilon_{t-1} + \theta_2\epsilon_{t-2} + \cdots + \theta_q\epsilon_{t-q} \ &= \epsilon_t + \theta_1L\epsilon_t + \theta_2L^2\epsilon_t + \cdots + \theta_qL^q\epsilon_t \ &= (1 + \theta_1L + \theta_2L^2 + \cdots + \theta_qL^q)\epsilon_t \ &= \theta(L)\epsilon_t \ \end{align*} \begin{align*} where~\epsilon_{t-1} = L\epsilon_t~and~\epsilon_{t-2} = L^2\epsilon_t$ \end{align*}

MA(1):

\begin{align*} \text{Main Equation} && Y_t &= \epsilon_t + \theta_1\epsilon_{t-1} \ \text{Expectation} && E(Y_t) &= E(\epsilon_t + \theta_1\epsilon_{t-1}) = E(\epsilon_t) + \theta_1E(\epsilon_{t-1}) = 0 \ \text{Variance} && Var(Y_t) &= E[(\epsilon_t + \theta_1\epsilon_{t-1})^2] \ && &= E(\epsilon_t^2) + 2\theta_1E(\epsilon_{t}\epsilon_{t-1}) + \theta_1^2E(\epsilon_{t-1}^2) \ && &= \sigma_{\epsilon_i}^2 + 2 \theta_1 \cdot 0 + \theta_1^2 \sigma_{\epsilon_i}^2 \ && &= \sigma_{\epsilon_i}^2 + \theta_1^2\sigma_{\epsilon_i}^2 \ \text{Covariance} && Cov(Y_t, Y_{t-1}) = \gamma_1 &= \text{E} \left[ (\epsilon_t + \theta_1 \epsilon_{t-1})(\epsilon_{t-1} + \theta_1 \epsilon_{t-2}) \right] \ && &= E (\epsilon_t \epsilon_{t-1}) + \theta_1 E (\epsilon_t \epsilon_{t-2}) + \theta_1 E (\epsilon_{t-1}^2) + \theta_1^2 E (\epsilon_{t-1} \epsilon_{t-2}) \ && &= 0 + \theta_1 \cdot 0 + \theta_1 \sigma_{\epsilon_{i}}^2 + \theta_1^2 \cdot 0 \ && &= \theta_1 \sigma_{\epsilon_{i}}^2 \ && Cov(Y_t, Y_{t-2}) = \gamma_2 &= \text{E} \left[ (\epsilon_t + \theta_1 \epsilon_{t-1})(\epsilon_{t-2} + \theta_1 \epsilon_{t-3}) \right] \ && &= E (\epsilon_t \epsilon_{t-2}) + \theta_1 E (\epsilon_t \epsilon_{t-3}) + \theta_1 E (\epsilon_{t-1} \epsilon_{t-2}) + \theta_1^2 E (\epsilon_{t-1} \epsilon_{t-3}) \ && &= 0 + \theta_1 \cdot 0 + \theta_1 \cdot 0 + \theta_1^2 \cdot 0 \ && &= 0 \ \text{Autocorrelation} && Corr(Y_t, Y_{t-1}) = \rho_1 &= \dfrac{\theta_1}{1+\theta_1^2} \ && Corr(Y_t, Y_{t-i}) = \rho_i &= 0~~for~~i > 1 \ \end{align*}MA(2):

\begin{align*} \text{Main Equation} && Y_t &= \epsilon_t + \theta_1\epsilon_{t-1} + \theta_2\epsilon_{t-2} \ \text{Expectation} && E(Y_t) &= E(\epsilon_t + \theta_1\epsilon_{t-1} + \theta_2\epsilon_{t-2}) = E(\epsilon_t) + \theta_1E(\epsilon_{t-1}) + \theta_2E(\epsilon_{t-2}) = 0 \ \text{Variance} && Var(Y_t) &= E[(\epsilon_t + \theta_1\epsilon_{t-1} + \theta_2\epsilon_{t-2})^2] \ && &= \sigma_{\epsilon_i}^2 + \theta_1^2\sigma_{\epsilon_i}^2 + \theta_2^2\sigma_{\epsilon_i}^2 \ \text{Covariance} && Cov(Y_t, Y_{t-1}) = \gamma_1 &= \text{E} \left[ (\epsilon_t + \theta_1 \epsilon_{t-1} + \theta_2\epsilon_{t-2})(\epsilon_{t-1} + \theta_1 \epsilon_{t-2} + \theta_2\epsilon_{t-3}) \right] \ && &= (\theta_1 + \theta_1\theta_2) \sigma_{\epsilon_{i}}^2 \ && Cov(Y_t, Y_{t-2}) = \gamma_2 &= \text{E} \left[ (\epsilon_t + \theta_1 \epsilon_{t-1} + \theta_2\epsilon_{t-2})(\epsilon_{t-2} + \theta_1 \epsilon_{t-3} + \theta_2\epsilon_{t-4}) \right] \ && &= \theta_2 \sigma_{\epsilon_{i}}^2 \ && Cov(Y_t, Y_{t-i}) = \gamma_i &= 0~~for~~i > 2 \ \text{Autocorrelation} && Corr(Y_t, Y_{t-1}) = \rho_1 &= \dfrac{\theta_1 + \theta_1 \theta_2}{1+\theta_1^2+\theta_2^2} \ && Corr(Y_t, Y_{t-2}) = \rho_2 &= \dfrac{\theta_2}{1+\theta_1^2+\theta_2^2} \ && Corr(Y_t, Y_{t-i}) = \rho_i &= 0~~for~~i > 2 \ \end{align*}MA(q):

\begin{align*} \text{Autocorrelation} && Corr(Y_t, Y_{t-i}) = \rho_i &= \begin{cases} \dfrac{\theta_i + \theta_1\theta_{i-1} + \theta_2\theta_{i-2} + \cdots + \theta_q\theta_{i-q}}{1 + \theta_1^2 + \cdots + \theta_q^2} & \text{ for } i= 1, 2, \cdots, q \ 0 & \text{ for } i > q \ \end{cases} \end{align*}

움직임 특성:

Stationarity Condition of MA(1): \(|\theta_1| < 1\)

Stationarity Condition of MA(2): \(|\theta_2| < 1\), \(\theta_1 + \theta_2 > -1\), \(\theta_1 - \theta_2 < 1\)

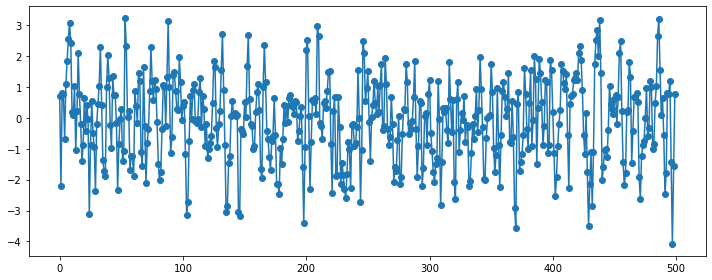

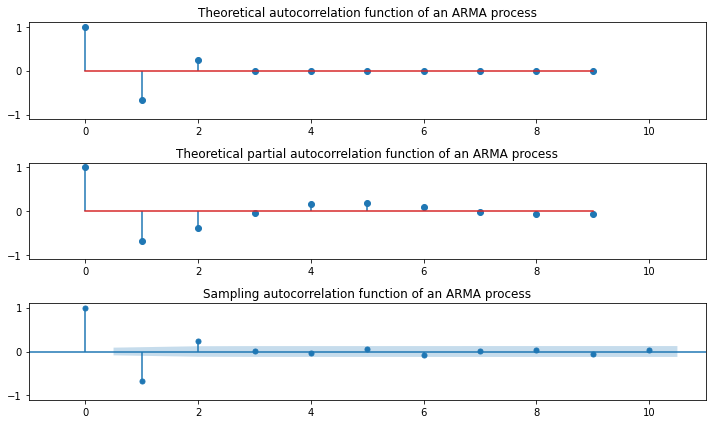

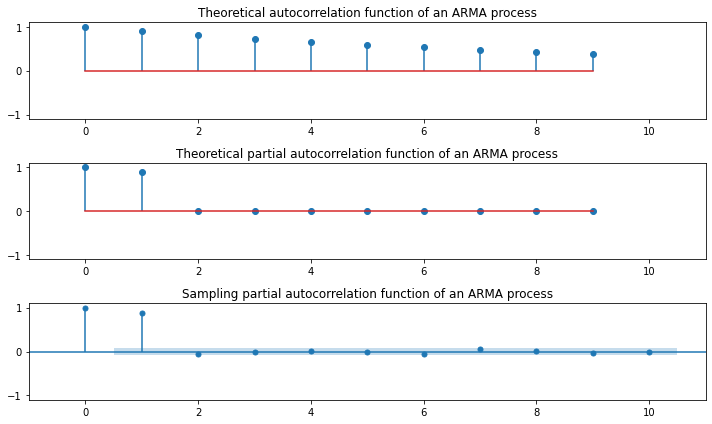

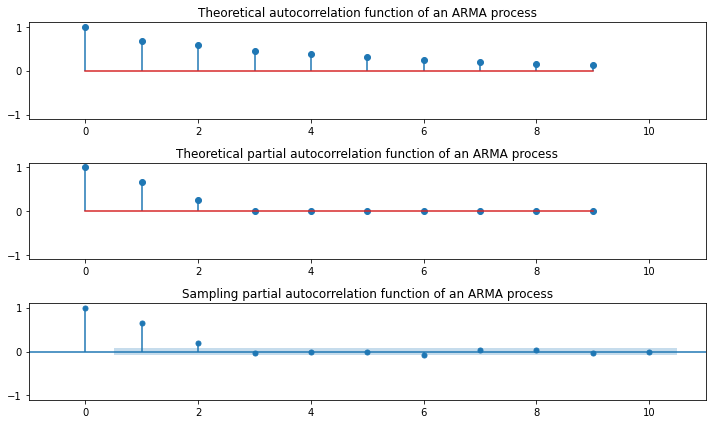

import numpy as np

import statsmodels.api as sm

import matplotlib.pyplot as plt

### MA(1)

plt.figure(figsize=(10, 4))

np.random.seed(123)

ar_params = np.array([])

ma_params = np.array([0.9])

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

y = sm.tsa.ArmaProcess(ar, ma).generate_sample(500, burnin=50)

plt.plot(y, 'o-')

plt.tight_layout()

plt.show()

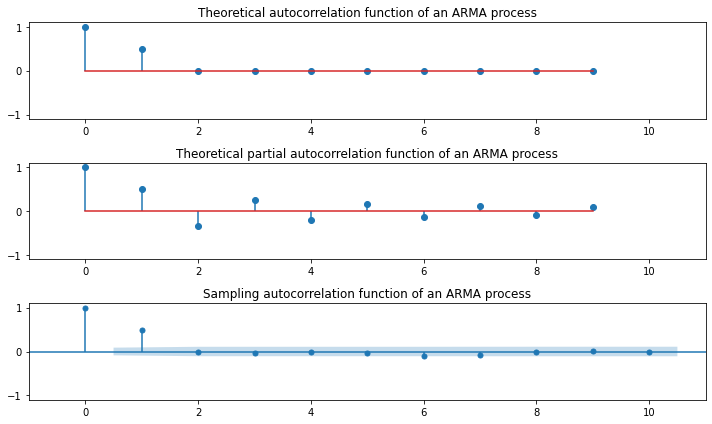

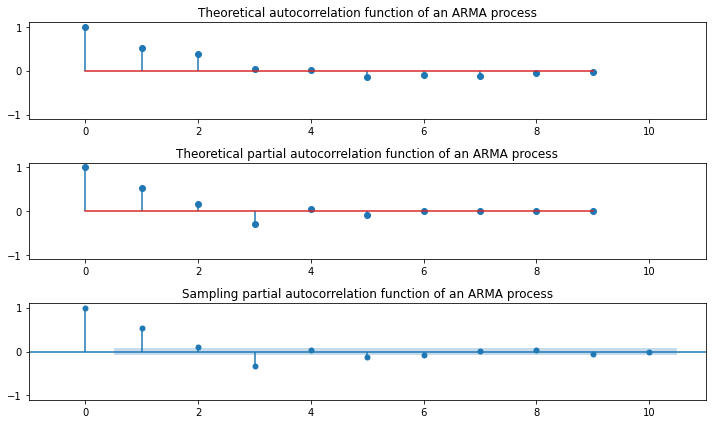

plt.figure(figsize=(10, 6))

plt.subplot(311)

plt.stem(sm.tsa.ArmaProcess(ar, ma).acf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical autocorrelation function of an ARMA process")

plt.subplot(312)

plt.stem(sm.tsa.ArmaProcess(ar, ma).pacf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical partial autocorrelation function of an ARMA process")

sm.graphics.tsa.plot_acf(y, lags=10, ax=plt.subplot(313))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Sampling autocorrelation function of an ARMA process")

plt.tight_layout()

plt.show()

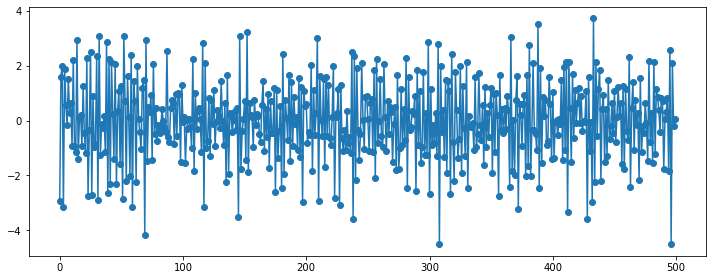

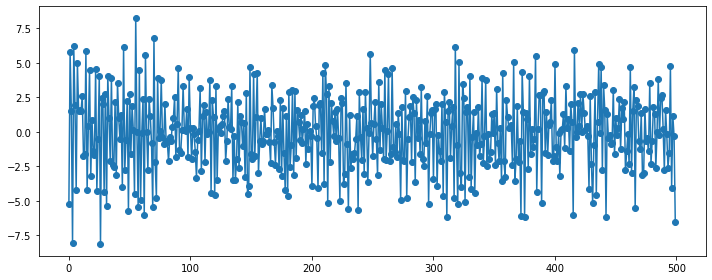

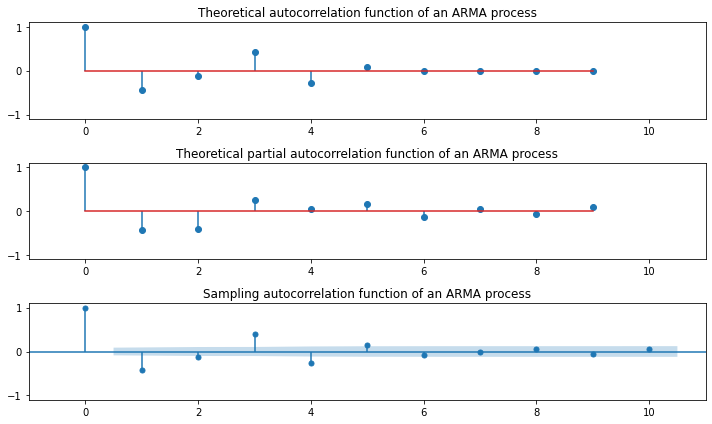

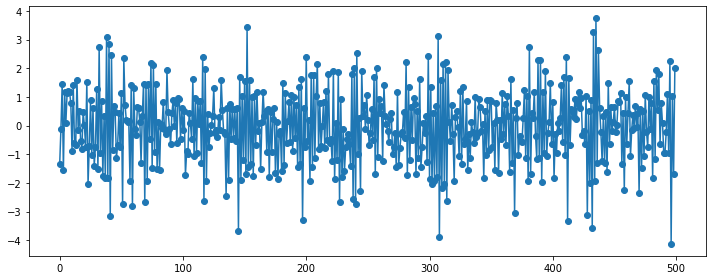

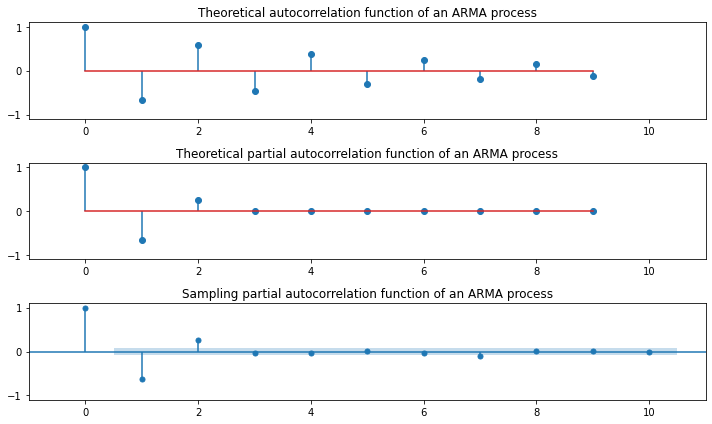

### MA(2)

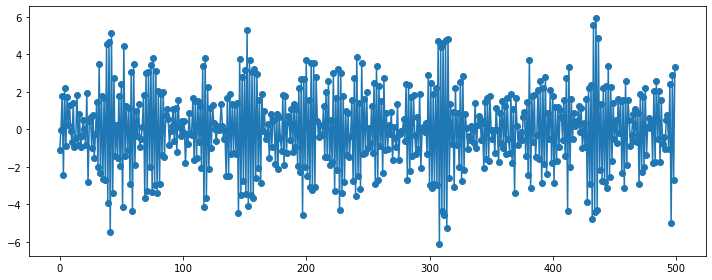

plt.figure(figsize=(10, 4))

np.random.seed(123)

ar_params = np.array([])

ma_params = np.array([-1, 0.6])

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

y = sm.tsa.ArmaProcess(ar, ma).generate_sample(500, burnin=50)

plt.plot(y, 'o-')

plt.tight_layout()

plt.show()

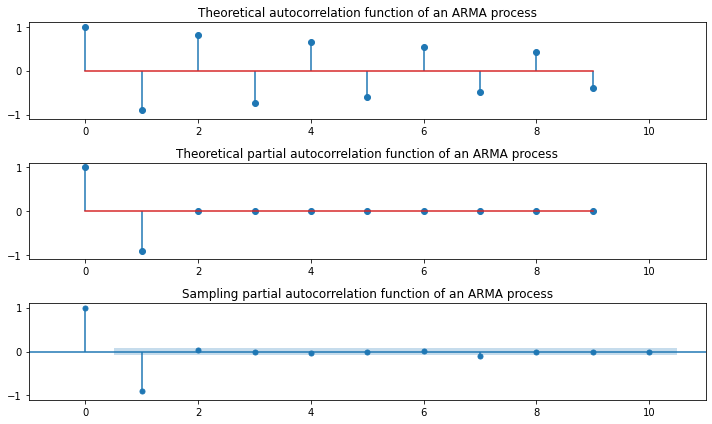

plt.figure(figsize=(10, 6))

plt.subplot(311)

plt.stem(sm.tsa.ArmaProcess(ar, ma).acf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical autocorrelation function of an ARMA process")

plt.subplot(312)

plt.stem(sm.tsa.ArmaProcess(ar, ma).pacf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical partial autocorrelation function of an ARMA process")

sm.graphics.tsa.plot_acf(y, lags=10, ax=plt.subplot(313))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Sampling autocorrelation function of an ARMA process")

plt.tight_layout()

plt.show()

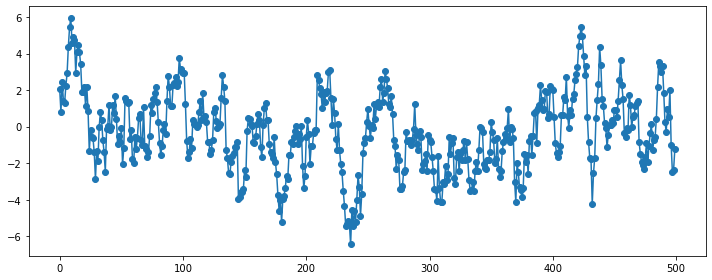

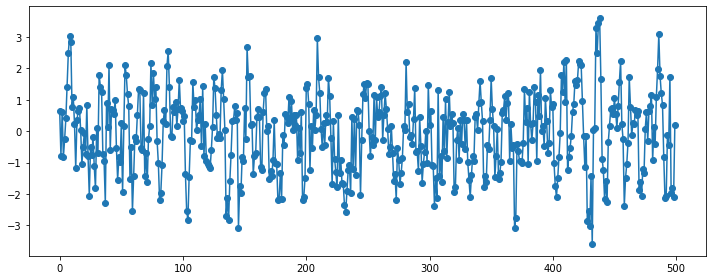

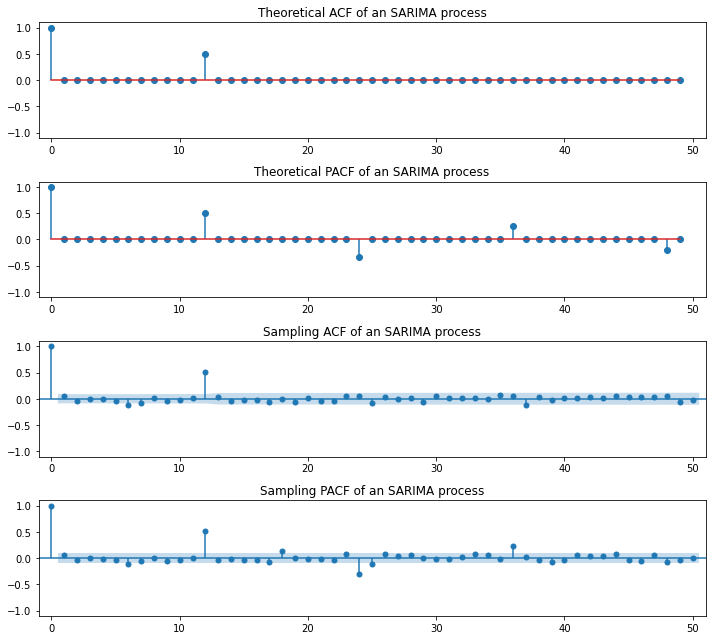

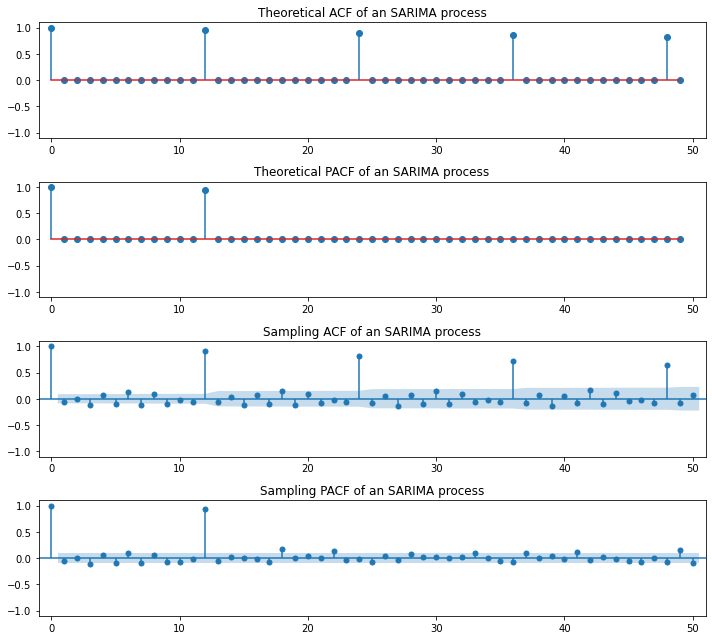

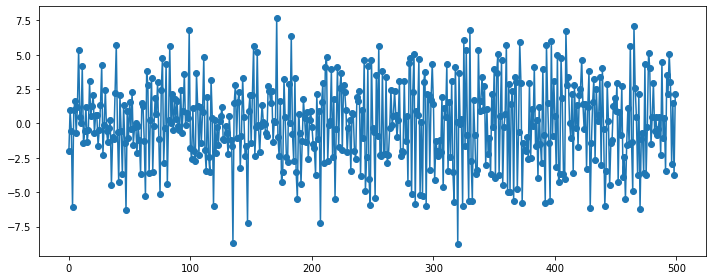

### MA(5)

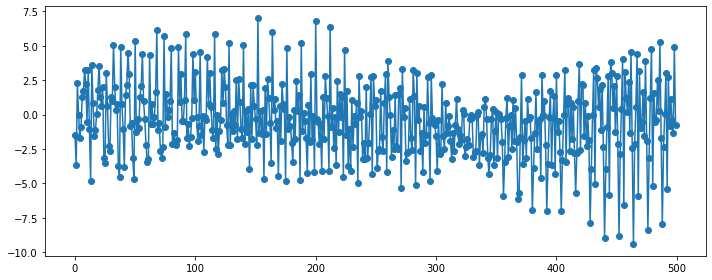

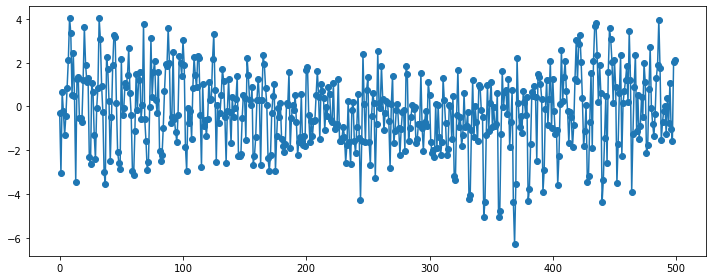

plt.figure(figsize=(10, 4))

np.random.seed(123)

ar_params = np.array([])

ma_params = np.array([-1, 1.6, 0.9, -1.5, 0.7])

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

y = sm.tsa.ArmaProcess(ar, ma).generate_sample(500, burnin=50)

plt.plot(y, 'o-')

plt.tight_layout()

plt.show()

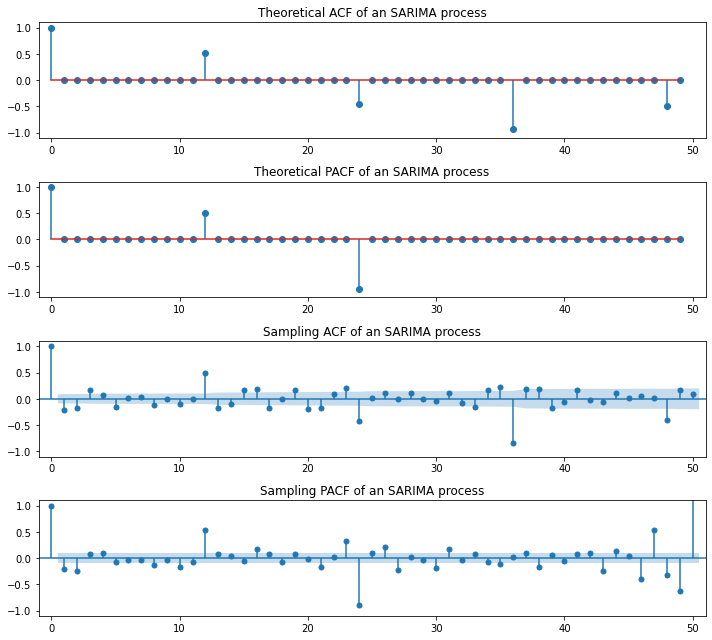

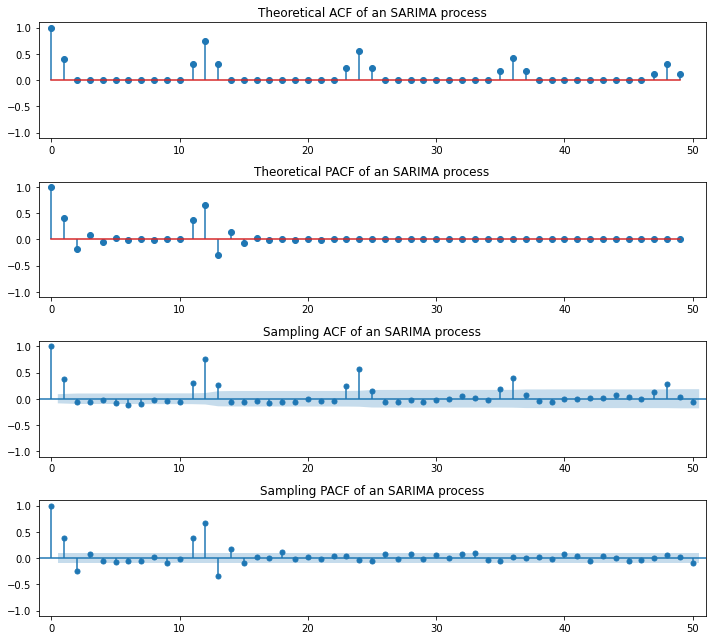

plt.figure(figsize=(10, 6))

plt.subplot(311)

plt.stem(sm.tsa.ArmaProcess(ar, ma).acf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical autocorrelation function of an ARMA process")

plt.subplot(312)

plt.stem(sm.tsa.ArmaProcess(ar, ma).pacf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical partial autocorrelation function of an ARMA process")

sm.graphics.tsa.plot_acf(y, lags=10, ax=plt.subplot(313))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Sampling autocorrelation function of an ARMA process")

plt.tight_layout()

plt.show()

AR(Auto-Regressive)¶

“\(AR(p)\): 알고리즘의 차수(\(p\))가 유한한 자기자신의 과거값들의 선형조합”

필요성: ACF가 시차(Lag)가 증가해도 0이 되지 않고 오랜시간 남아있는 경우에 \(MA\)모형을 사용하면 차수가 \(\infty\)로 감

\begin{align*} Y_t &= \phi_1Y_{t-1} + \phi_2Y_{t-2} + \cdots + \phi_pY_{t-p} + \epsilon_t \ \end{align*} \begin{align*} where~\epsilon_i \sim i.i.d.~WN(0, \sigma_{\epsilon_i}^2)~and~\displaystyle \sum_{i=1}^{\infty}\phi_i^2 < \infty \end{align*} \begin{align*} Y_t &= \phi_1Y_{t-1} + \phi_2Y_{t-2} + \cdots + \phi_pY_{t-p} + \epsilon_t \ Y_t - \phi_1Y_{t-1} - \phi_2Y_{t-2} - \cdots - \phi_pY_{t-p} &= \epsilon_t \ Y_t - \phi_1LY_t - \phi_2L^2Y_t - \cdots - \phi_pL^pY_t &= \epsilon_t \ (1 - \phi_1L - \phi_2L^2 - \cdots - \phi_pL^p)Y_t &= \epsilon_t \ \phi(L)Y_t &= \epsilon_t \ \end{align*} \begin{align*} where~Y_{t-1} = LY_t~and~Y_{t-2} = L^2Y_t \end{align*}

AR(1):

\begin{align*} \text{Main Equation} && Y_t &= \phi_1 Y_{t-1} + \epsilon_t \ && &= \phi_1 (\phi_1 Y_{t-2} + \epsilon_{t-1}) + \epsilon_t \ && &= \phi_1^2 Y_{t-2} + \phi_1 \epsilon_{t-1} + \epsilon_t \ && &= \phi_1^2 (\phi_1 Y_{t-3} + \epsilon_{t-2}) + \phi_1 \epsilon_{t-1} + \epsilon_t \ && &= \phi_1^3 Y_{t-3} + \phi_1^2 \epsilon_{t-2} + \phi_1 \epsilon_{t-1} + \epsilon_t \ && & \vdots \ && &= \epsilon_t + \phi_1 \epsilon_{t-1} +\phi_1^2 \epsilon_{t-2} + \phi_1^3 \epsilon_{t-3} + \cdots \ && &= MA(\infty) \ \text{Expectation} && E(Y_t) &= \mu = E(\phi_1 Y_{t-1} + \epsilon_t) = \phi_1 E(Y_{t-1}) + E(\epsilon_{t}) = \phi_1 \mu + 0 \ && (1-\phi_1)\mu &= 0 \ && \mu &= 0~~if~~\phi_1 \neq 1 \ \text{Variance} && Var(Y_t) &= \gamma_0 = E(Y_t^2) = E[(\phi_1 Y_{t-1} + \epsilon_t)^2] = E[ \phi_1^2 Y_{t-1}^2 + 2\phi_1 Y_{t-1} \epsilon_{t} + \epsilon_{t}^2] \ && &= \phi_1^2 E[ Y_{t-1}^2 ] + 2 \phi E[ Y_{t-1} \epsilon_{t} ] + E[ \epsilon_{t}^2 ] \ && &= \phi_1^2 \gamma_0 + 0 + \sigma_{\epsilon_i}^2 \ && (1-\phi_1^2)\gamma_0 &= \sigma_{\epsilon_i}^2 \ && \gamma_0 &= \dfrac{\sigma_{\epsilon_i}^2}{1-\phi_1^2}~~if~~\phi_1^2 \neq 1 \ \text{Covariance} && Cov(Y_t, Y_{t-1}) &= \gamma_1 = E [(\phi_1 Y_{t-1} + \epsilon_t)(\phi_1 Y_{t-2} + \epsilon_{t-1})] \ && &= \phi_1^2E (Y_{t-1} Y_{t-2}) + \phi_1 E (Y_{t-1} \epsilon_{t-1}) + \phi_1 E (\epsilon_{t} Y_{t-2}) + E (\epsilon_{t} \epsilon_{t-1}) \ && &= \phi_1^2\gamma_1 + \phi_1 \sigma_{\epsilon_{i}}^2 + \phi_1 \cdot 0 + 0 \ && (1 - \phi_1^2)\gamma_1 &= \phi_1 \sigma_{\epsilon_{i}}^2 \ && \gamma_1 &= \dfrac{\phi_1 \sigma_{\epsilon_{i}}^2}{1 - \phi_1^2} \ && Cov(Y_t, Y_{t-2}) &= \gamma_2 = E [(\phi_1 Y_{t-1} + \epsilon_t)(\phi_1 Y_{t-3} + \epsilon_{t-2})] \ && &= \phi_1^2E (Y_{t-1} Y_{t-3}) + \phi_1 E (Y_{t-1} \epsilon_{t-2}) + \phi_1 E (\epsilon_{t} Y_{t-3}) + E (\epsilon_{t} \epsilon_{t-2}) \ && &= \phi_1^2\gamma_2 + \phi_1 E[(\phi_1Y_{t-2}+\epsilon_{t-1})\epsilon_{t-2}] + \phi_1 \cdot 0 + 0 \ && &= \phi_1^2\gamma_2 + \phi_1^2 E(Y_{t-2}\epsilon_{t-2}) + \phi_1 E(\epsilon_{t-1}\epsilon_{t-2}) \ && &= \phi_1^2\gamma_2 + \phi_1^2 \sigma_{\epsilon_{i}}^2 + 0 \ && (1 - \phi_1^2)\gamma_2 &= \phi_1^2 \sigma_{\epsilon_{i}}^2 \ && \gamma_2 &= \dfrac{\phi_1^2 \sigma_{\epsilon_{i}}^2}{1 - \phi_1^2} \ \text{Autocorrelation} && Corr(Y_t, Y_{t-1}) = \rho_1 &= \phi_1 \ && Corr(Y_t, Y_{t-2}) = \rho_2 &= \phi_1^2 \ && Corr(Y_t, Y_{t-i}) = \rho_i &= \phi_1^i \ \end{align*}

움직임 특성:

\(\phi_1 = 0\): \(Y_t\)는 백색잡음

\(\phi_1 < 0\): 부호를 바꿔가면서(진동하면서) 지수적으로 감소

\(\phi_1 > 0\): 시차가 증가하면서 자기상관계수는 지수적으로 감소

\(\phi_1 = 1\): \(Y_t\)는 비정상성인 랜덤워크(Random Walk) \begin{align*} Y_t &= Y_{t-1} + \epsilon_t \ Var(Y_t) &= Var(Y_{t-1} + \epsilon_t) \ &= Var(Y_{t-1}) + Var(\epsilon_t) ;; (\text{independence}) \ Var(Y_t) &> Var(Y_{t-1}) \end{align*}

Stationarity Condition: \(|\phi_1| < 1\)

AR(2):

\begin{align*} \text{Main Equation} && Y_t &= \phi_1 Y_{t-1} + \phi_2 Y_{t-2} + \epsilon_t \ && &= \phi_1 (\phi_1 Y_{t-2} + \epsilon_{t-1}) + \phi_2 (\phi_2 Y_{t-3} + \epsilon_{t-2}) + \epsilon_t \ && &= \phi_1^2 Y_{t-2} + \phi_1 \epsilon_{t-1} + \phi_2^2 Y_{t-3} + \phi_2 \epsilon_{t-2} + \epsilon_t \ && &= \phi_1^2 (\phi_1 Y_{t-3} + \phi_2 Y_{t-4} + \epsilon_{t-3}) + \phi_1 \epsilon_{t-1} + \phi_2^2 (\phi_1 Y_{t-4} + \phi_2 Y_{t-5} + \epsilon_{t-4}) + \phi_2 \epsilon_{t-2} + \epsilon_t \ && & \vdots \ && &= \epsilon_t + \phi_1 \epsilon_{t-1} + \phi_2 \epsilon_{t-1} + \phi_1^2 \epsilon_{t-2} + \phi_2^2 \epsilon_{t-2} + \phi_1^3 \epsilon_{t-3} + \phi_2^3 \epsilon_{t-3} + \cdots \ && &= MA(\infty) \ \text{Expectation} && E(Y_t) &= \mu = E(\phi_1 Y_{t-1} + \phi_2 Y_{t-2} + \epsilon_t) = \phi_1 E(Y_{t-1}) + \phi_2 E(Y_{t-2}) + E(\epsilon_{t}) = \phi_1 \mu + \phi_2 \mu + 0 \ && (1-\phi_1-\phi_2)\mu &= 0 \ && \mu &= 0~~if~~\phi_1+\phi_2 \neq 1 \ \text{Covariance(“Yule-Walker Equation”)} && \gamma_i &= E(Y_tY_{t-i}) = E[(\phi_1 Y_{t-1} + \phi_2 Y_{t-2} + \epsilon_t)Y_{t-i}] \ && &= E(\phi_1 Y_{t-1}Y_{t-i}) + E(\phi_2 Y_{t-2}Y_{t-i}) + E(\epsilon_t Y_{t-i}) \ && &= \phi_1 \gamma_{i-1} + \phi_2 \gamma_{i-2} \ \text{Autocorrelation} && Corr(Y_t, Y_{t-i}) &= \rho_i = \phi_1 \rho_{i-1} + \phi_2 \rho_{i-2} \ && \rho_1 &= \phi_1 \rho_{0} + \phi_2 \rho_{-1} = \phi_1 \cdot 1 + \phi_2 \rho_{1} \ && (1-\phi_2)\rho_1 &= \phi_1 \ && \rho_1 &= \dfrac{\phi_1}{1-\phi_2} \ && & \vdots \ && \rho_2 &= \dfrac{\phi_1^2 + \phi_2(1-\phi_2)}{1-\phi_2} \ && & \vdots \ && \rho_i &= \left( 1+\dfrac{1+\phi_2}{1-\phi_2} \cdot i \right)\left(\dfrac{\phi_1}{2} \right)^i \ \end{align*}

움직임 특성:

시차가 증가하면서 자기상관계수의 절대값은 지수적으로 감소

진동 주파수에 따라 다르지만 진동 가능

Stationarity Condition: \(|\phi_1| < 1\), \(\phi_1 + \phi_2 < 1\), \(\phi_2 - \phi_1 < 1\)

import numpy as np

import statsmodels.api as sm

import matplotlib.pyplot as plt

### AR(1)

plt.figure(figsize=(10, 4))

np.random.seed(123)

ar_params = np.array([0.9])

ma_params = np.array([])

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

y = sm.tsa.ArmaProcess(ar, ma).generate_sample(500, burnin=50)

plt.plot(y, 'o-')

plt.tight_layout()

plt.show()

plt.figure(figsize=(10, 6))

plt.subplot(311)

plt.stem(sm.tsa.ArmaProcess(ar, ma).acf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical autocorrelation function of an ARMA process")

plt.subplot(312)

plt.stem(sm.tsa.ArmaProcess(ar, ma).pacf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical partial autocorrelation function of an ARMA process")

sm.graphics.tsa.plot_pacf(y, lags=10, ax=plt.subplot(313))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Sampling partial autocorrelation function of an ARMA process")

plt.tight_layout()

plt.show()

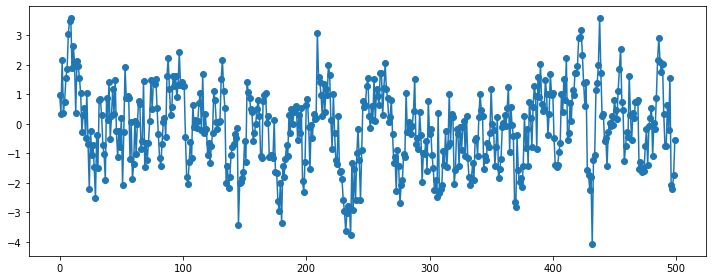

### AR(1)

plt.figure(figsize=(10, 4))

np.random.seed(123)

ar_params = np.array([-0.9])

ma_params = np.array([])

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

y = sm.tsa.ArmaProcess(ar, ma).generate_sample(500, burnin=50)

plt.plot(y, 'o-')

plt.tight_layout()

plt.show()

plt.figure(figsize=(10, 6))

plt.subplot(311)

plt.stem(sm.tsa.ArmaProcess(ar, ma).acf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical autocorrelation function of an ARMA process")

plt.subplot(312)

plt.stem(sm.tsa.ArmaProcess(ar, ma).pacf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical partial autocorrelation function of an ARMA process")

sm.graphics.tsa.plot_pacf(y, lags=10, ax=plt.subplot(313))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Sampling partial autocorrelation function of an ARMA process")

plt.tight_layout()

plt.show()

### AR(2)

plt.figure(figsize=(10, 4))

np.random.seed(123)

ar_params = np.array([0.5, 0.25])

ma_params = np.array([])

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

y = sm.tsa.ArmaProcess(ar, ma).generate_sample(500, burnin=50)

plt.plot(y, 'o-')

plt.tight_layout()

plt.show()

plt.figure(figsize=(10, 6))

plt.subplot(311)

plt.stem(sm.tsa.ArmaProcess(ar, ma).acf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical autocorrelation function of an ARMA process")

plt.subplot(312)

plt.stem(sm.tsa.ArmaProcess(ar, ma).pacf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical partial autocorrelation function of an ARMA process")

sm.graphics.tsa.plot_pacf(y, lags=10, ax=plt.subplot(313))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Sampling partial autocorrelation function of an ARMA process")

plt.tight_layout()

plt.show()

### AR(2)

plt.figure(figsize=(10, 4))

np.random.seed(123)

ar_params = np.array([-0.5, 0.25])

ma_params = np.array([])

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

y = sm.tsa.ArmaProcess(ar, ma).generate_sample(500, burnin=50)

plt.plot(y, 'o-')

plt.tight_layout()

plt.show()

plt.figure(figsize=(10, 6))

plt.subplot(311)

plt.stem(sm.tsa.ArmaProcess(ar, ma).acf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical autocorrelation function of an ARMA process")

plt.subplot(312)

plt.stem(sm.tsa.ArmaProcess(ar, ma).pacf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical partial autocorrelation function of an ARMA process")

sm.graphics.tsa.plot_pacf(y, lags=10, ax=plt.subplot(313))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Sampling partial autocorrelation function of an ARMA process")

plt.tight_layout()

plt.show()

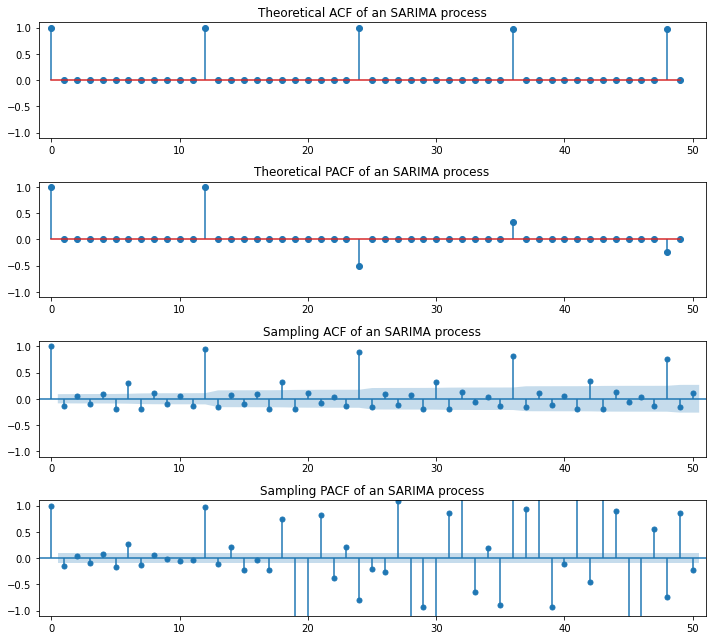

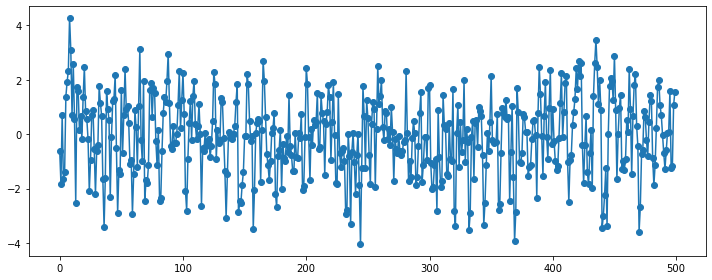

### AR(5)

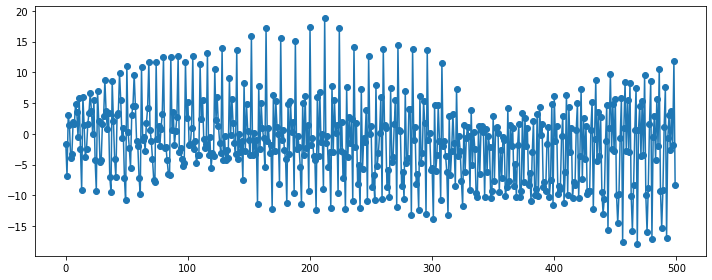

plt.figure(figsize=(10, 4))

np.random.seed(123)

ar_params = np.array([0.5, 0.25, -0.3, 0.1, -0.1])

ma_params = np.array([])

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

y = sm.tsa.ArmaProcess(ar, ma).generate_sample(500, burnin=50)

plt.plot(y, 'o-')

plt.tight_layout()

plt.show()

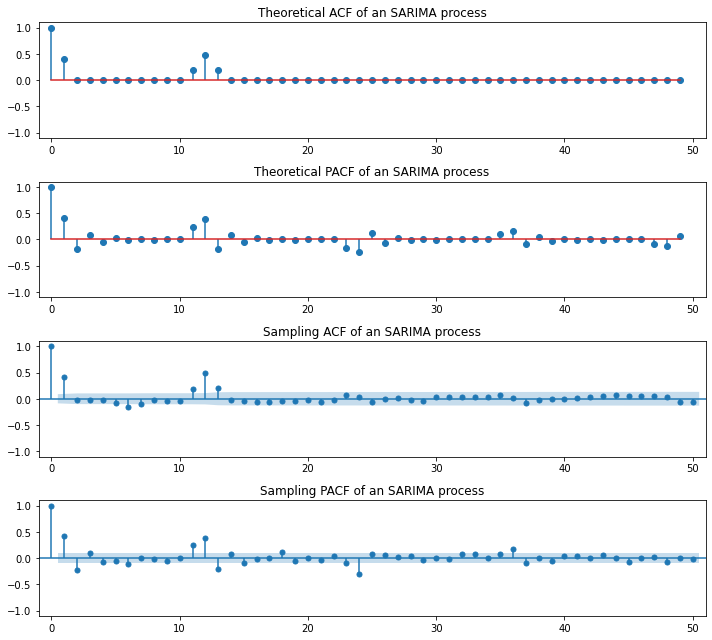

plt.figure(figsize=(10, 6))

plt.subplot(311)

plt.stem(sm.tsa.ArmaProcess(ar, ma).acf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical autocorrelation function of an ARMA process")

plt.subplot(312)

plt.stem(sm.tsa.ArmaProcess(ar, ma).pacf(lags=10))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Theoretical partial autocorrelation function of an ARMA process")

sm.graphics.tsa.plot_pacf(y, lags=10, ax=plt.subplot(313))

plt.xlim(-1, 11)

plt.ylim(-1.1, 1.1)

plt.title("Sampling partial autocorrelation function of an ARMA process")

plt.tight_layout()

plt.show()

Relation of MA and AR¶

가역성 조건(Invertibility Condition):

1) \(MA(q)\) -> \(AR(\infty)\): 변환 후 AR 모형이 Stationary Condition이면 “Invertibility”

2) \(AR(p)\) -> \(MA(\infty)\): 여러개 모형변환 가능하지만 “Invertibility” 조건을 만족하는 MA 모형은 단 1개만 존재

ARMA(Auto-Regressive Moving Average)¶

“\(ARMA(p,q)\): 알고리즘의 차수(\(p~and~q\))가 유한한 \(AR(p)\)와 \(MA(q)\)의 선형조합”

\(AR\)과 \(MA\)의 정상성 조건과 가역성 조건이 동일하게 \(ARMA\) 알고리즘들에 적용

종속변수 \(Y_t\)는 종속변수 \(Y_t\)와 백색잡음(\(\epsilon_t\)) 차분들(Lagged Variables)의 합으로 예측가능

\begin{align*} Y_t = \phi_1Y_{t-1} + \phi_2Y_{t-2} + \cdots + \phi_pY_{t-p} + \theta_1\epsilon_{t-1} + \theta_2\epsilon_{t-2} + \cdots + \theta_q\epsilon_{t-q} + \epsilon_t \ \end{align*} \begin{align*} where~\epsilon_i \sim i.i.d.~WN(0, \sigma_{\epsilon_i}^2)~and~\displaystyle \sum_{i=1}^{\infty}\phi_i^2 < \infty, \displaystyle \sum_{i=1}^{\infty}\theta_i^2 < \infty \end{align*} \begin{align*} \phi(L)Y_t &= \theta(L)\epsilon_t \ Y_t &= \dfrac{\theta(L)}{\phi(L)}\epsilon_t \ \end{align*}

\begin{align*} \text{Main Equation} && Y_t &= \dfrac{\theta(L)}{\phi(L)}\epsilon_t \ && &= \psi(L)\epsilon_t \text{ where } \psi(L) = \dfrac{\theta(L)}{\phi(L)} \ && &= (1 + \psi_1L + \psi_2L^2 + \cdots)\epsilon_t \ && &= \epsilon_t + \psi_1\epsilon_{t-1} + \psi_2\epsilon_{t-2} + \cdots \ && & \text{ where } \ && \psi_1 &= \theta_1 - \phi_1 \ && \psi_2 &= \theta_2 - \phi_2 - \phi_1 \psi_1 \ && & \vdots \ && \psi_j &= \theta_j - \phi_p\psi_{j-p} - \phi_2 \psi_{p-1} - \cdots - \phi_1 \psi_{j-1} \ \text{Autocorrelation(“Yule-Walker Equation”)} && \rho_i &= \phi_1 \rho_{i-1} + \cdots + \phi_p \rho_{i-p} \ \end{align*}

import pandas as pd

import numpy as np

import statsmodels

import statsmodels.api as sm

import matplotlib.pyplot as plt

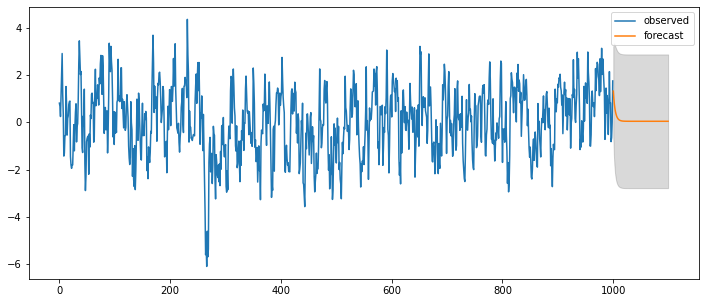

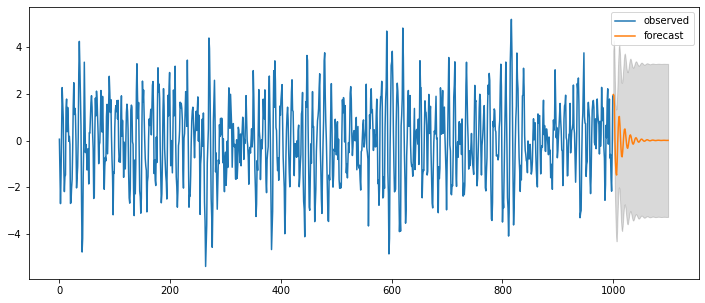

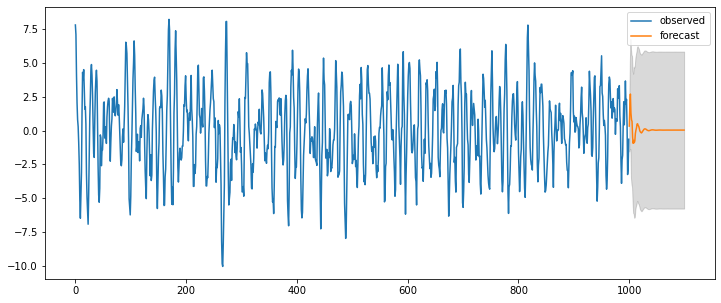

### ARMA(1,0) = AR(1)

# Setting

np.random.seed(123)

ar_params = np.array([0.75])

ma_params = np.array([])

index_name = ['const', 'ar(1)']

ahead = 100

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

ar_order, ma_order = len(ar)-1, len(ma)-1

# Generator

y = statsmodels.tsa.arima_process.arma_generate_sample(ar, ma, nsample=1000, burnin=500)

fit = statsmodels.tsa.arima_model.ARMA(y, (ar_order,ma_order)).fit(trend='c', disp=0)

pred_ts_point = fit.forecast(steps=ahead)[0]

pred_ts_interval = fit.forecast(steps=ahead)[2]

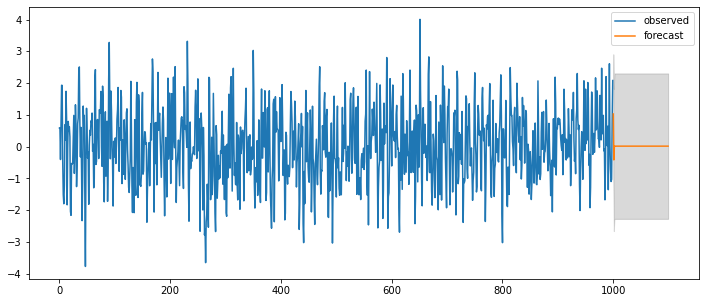

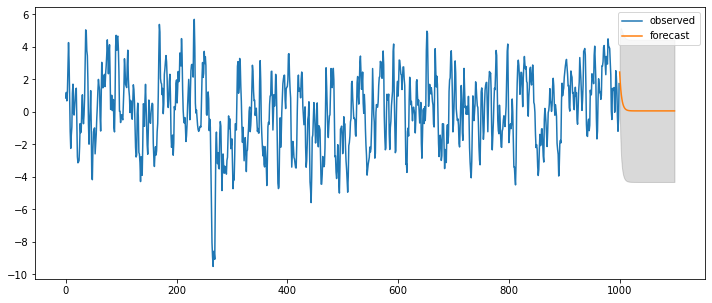

ax = pd.DataFrame(y).plot(figsize=(12,5))

forecast_index = [i for i in range(pd.DataFrame(y).index.max()+1, pd.DataFrame(y).index.max()+ahead+1)]

pd.DataFrame(pred_ts_point, index=forecast_index).plot(label='forecast', ax=ax)

ax.fill_between(pd.DataFrame(pred_ts_interval, index=forecast_index).index,

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,0],

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,1], color='k', alpha=0.15)

plt.legend(['observed', 'forecast'])

display(fit.summary2())

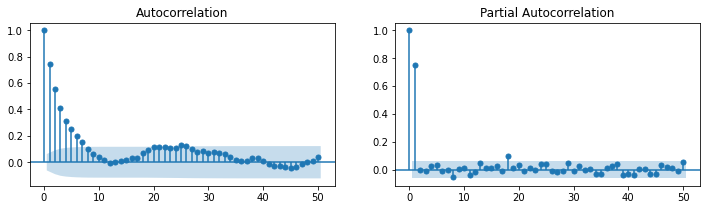

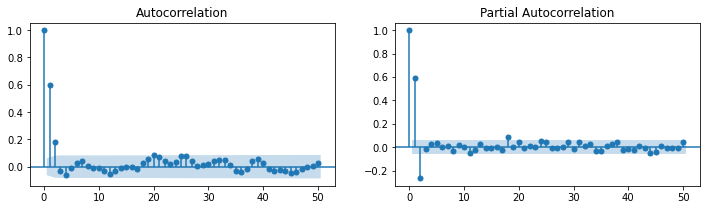

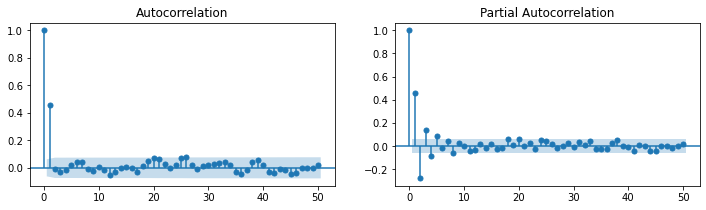

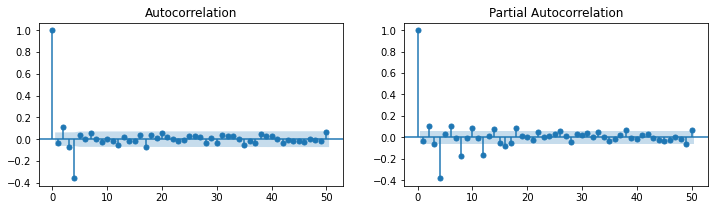

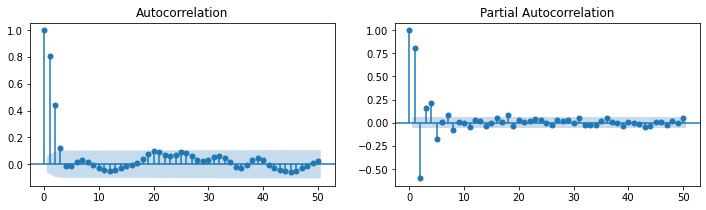

plt.figure(figsize=(12,3))

statsmodels.graphics.tsaplots.plot_acf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(121))

statsmodels.graphics.tsaplots.plot_pacf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(122))

plt.show()

| Model: | ARMA | BIC: | 2775.2536 |

| Dependent Variable: | y | Log-Likelihood: | -1377.3 |

| Date: | 2020-07-31 22:45 | Scale: | 1.0000 |

| No. Observations: | 1000 | Method: | css-mle |

| Df Model: | 2 | Sample: | 0 |

| Df Residuals: | 998 | 0 | |

| Converged: | 1.0000 | S.D. of innovations: | 0.959 |

| No. Iterations: | 4.0000 | HQIC: | 2766.126 |

| AIC: | 2760.5304 |

| Coef. | Std.Err. | t | P>|t| | [0.025 | 0.975] | |

|---|---|---|---|---|---|---|

| const | 0.0406 | 0.1197 | 0.3389 | 0.7347 | -0.1940 | 0.2752 |

| ar.L1.y | 0.7475 | 0.0210 | 35.6193 | 0.0000 | 0.7063 | 0.7886 |

| Real | Imaginary | Modulus | Frequency | |

|---|---|---|---|---|

| AR.1 | 1.3379 | 0.0000 | 1.3379 | 0.0000 |

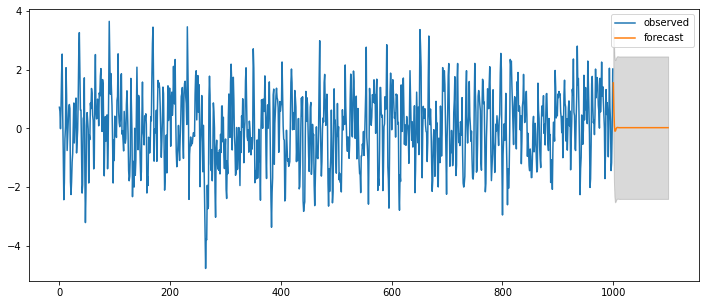

### ARMA(2,0) = AR(2)

# Setting

np.random.seed(123)

ar_params = np.array([0.75, -0.25])

ma_params = np.array([])

index_name = ['const', 'ar(1)', 'ar(2)']

ahead = 100

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

ar_order, ma_order = len(ar)-1, len(ma)-1

# Generator

y = statsmodels.tsa.arima_process.arma_generate_sample(ar, ma, nsample=1000, burnin=500)

fit = statsmodels.tsa.arima_model.ARMA(y, (ar_order,ma_order)).fit(trend='c', disp=0)

pred_ts_point = fit.forecast(steps=ahead)[0]

pred_ts_interval = fit.forecast(steps=ahead)[2]

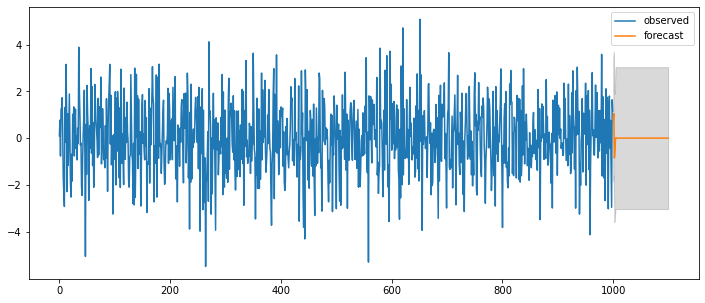

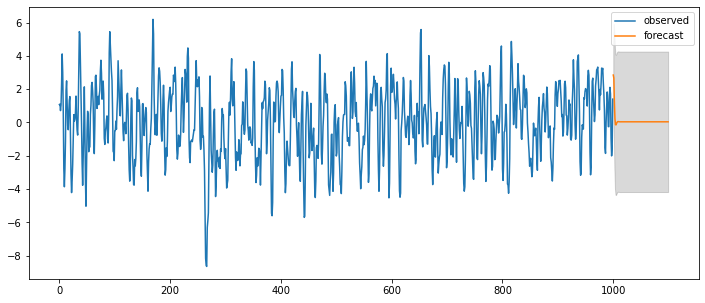

ax = pd.DataFrame(y).plot(figsize=(12,5))

forecast_index = [i for i in range(pd.DataFrame(y).index.max()+1, pd.DataFrame(y).index.max()+ahead+1)]

pd.DataFrame(pred_ts_point, index=forecast_index).plot(label='forecast', ax=ax)

ax.fill_between(pd.DataFrame(pred_ts_interval, index=forecast_index).index,

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,0],

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,1], color='k', alpha=0.15)

plt.legend(['observed', 'forecast'])

display(fit.summary2())

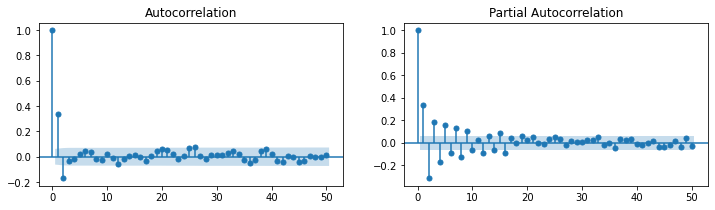

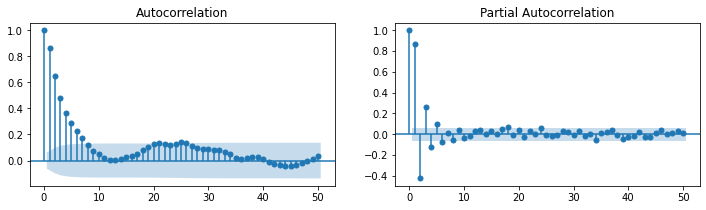

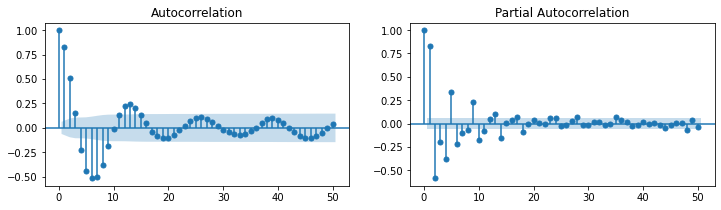

plt.figure(figsize=(12,3))

statsmodels.graphics.tsaplots.plot_acf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(121))

statsmodels.graphics.tsaplots.plot_pacf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(122))

plt.show()

| Model: | ARMA | BIC: | 2781.5944 |

| Dependent Variable: | y | Log-Likelihood: | -1377.0 |

| Date: | 2020-07-31 22:45 | Scale: | 1.0000 |

| No. Observations: | 1000 | Method: | css-mle |

| Df Model: | 3 | Sample: | 0 |

| Df Residuals: | 997 | 0 | |

| Converged: | 1.0000 | S.D. of innovations: | 0.959 |

| No. Iterations: | 5.0000 | HQIC: | 2769.424 |

| AIC: | 2761.9633 |

| Coef. | Std.Err. | t | P>|t| | [0.025 | 0.975] | |

|---|---|---|---|---|---|---|

| const | 0.0193 | 0.0592 | 0.3255 | 0.7448 | -0.0967 | 0.1352 |

| ar.L1.y | 0.7557 | 0.0305 | 24.7647 | 0.0000 | 0.6959 | 0.8155 |

| ar.L2.y | -0.2678 | 0.0305 | -8.7791 | 0.0000 | -0.3276 | -0.2080 |

| Real | Imaginary | Modulus | Frequency | |

|---|---|---|---|---|

| AR.1 | 1.4111 | -1.3203 | 1.9324 | -0.1197 |

| AR.2 | 1.4111 | 1.3203 | 1.9324 | 0.1197 |

### ARMA(4,0) = AR(4)

# Setting

np.random.seed(123)

ar_params = np.array([0.75, -0.25, 0.2, -0.5])

ma_params = np.array([])

index_name = ['const', 'ar(1)', 'ar(2)', 'ar(3)', 'ar(4)']

ahead = 100

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

ar_order, ma_order = len(ar)-1, len(ma)-1

# Generator

y = statsmodels.tsa.arima_process.arma_generate_sample(ar, ma, nsample=1000, burnin=500)

fit = statsmodels.tsa.arima_model.ARMA(y, (ar_order,ma_order)).fit(trend='c', disp=0)

pred_ts_point = fit.forecast(steps=ahead)[0]

pred_ts_interval = fit.forecast(steps=ahead)[2]

ax = pd.DataFrame(y).plot(figsize=(12,5))

forecast_index = [i for i in range(pd.DataFrame(y).index.max()+1, pd.DataFrame(y).index.max()+ahead+1)]

pd.DataFrame(pred_ts_point, index=forecast_index).plot(label='forecast', ax=ax)

ax.fill_between(pd.DataFrame(pred_ts_interval, index=forecast_index).index,

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,0],

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,1], color='k', alpha=0.15)

plt.legend(['observed', 'forecast'])

display(fit.summary2())

plt.figure(figsize=(12,3))

statsmodels.graphics.tsaplots.plot_acf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(121))

statsmodels.graphics.tsaplots.plot_pacf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(122))

plt.show()

| Model: | ARMA | BIC: | 2796.4388 |

| Dependent Variable: | y | Log-Likelihood: | -1377.5 |

| Date: | 2020-07-31 22:45 | Scale: | 1.0000 |

| No. Observations: | 1000 | Method: | css-mle |

| Df Model: | 5 | Sample: | 0 |

| Df Residuals: | 995 | 0 | |

| Converged: | 1.0000 | S.D. of innovations: | 0.958 |

| No. Iterations: | 12.0000 | HQIC: | 2778.184 |

| AIC: | 2766.9923 |

| Coef. | Std.Err. | t | P>|t| | [0.025 | 0.975] | |

|---|---|---|---|---|---|---|

| const | 0.0093 | 0.0374 | 0.2500 | 0.8026 | -0.0639 | 0.0826 |

| ar.L1.y | 0.7470 | 0.0279 | 26.7627 | 0.0000 | 0.6923 | 0.8017 |

| ar.L2.y | -0.2521 | 0.0362 | -6.9600 | 0.0000 | -0.3231 | -0.1811 |

| ar.L3.y | 0.1631 | 0.0362 | 4.4995 | 0.0000 | 0.0920 | 0.2341 |

| ar.L4.y | -0.4699 | 0.0279 | -16.8256 | 0.0000 | -0.5247 | -0.4152 |

| Real | Imaginary | Modulus | Frequency | |

|---|---|---|---|---|

| AR.1 | 0.8756 | -0.6261 | 1.0764 | -0.0988 |

| AR.2 | 0.8756 | 0.6261 | 1.0764 | 0.0988 |

| AR.3 | -0.7021 | -1.1592 | 1.3553 | -0.3367 |

| AR.4 | -0.7021 | 1.1592 | 1.3553 | 0.3367 |

### ARMA(0,1) = MA(1)

# Setting

np.random.seed(123)

ar_params = np.array([])

ma_params = np.array([0.65])

index_name = ['const', 'ma(1)']

ahead = 100

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

ar_order, ma_order = len(ar)-1, len(ma)-1

# Generator

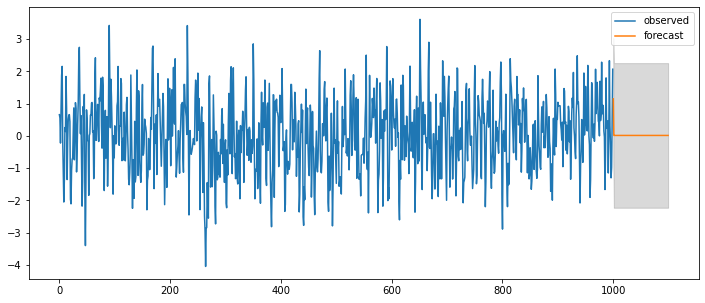

y = statsmodels.tsa.arima_process.arma_generate_sample(ar, ma, nsample=1000, burnin=500)

fit = statsmodels.tsa.arima_model.ARMA(y, (ar_order,ma_order)).fit(trend='c', disp=0)

pred_ts_point = fit.forecast(steps=ahead)[0]

pred_ts_interval = fit.forecast(steps=ahead)[2]

ax = pd.DataFrame(y).plot(figsize=(12,5))

forecast_index = [i for i in range(pd.DataFrame(y).index.max()+1, pd.DataFrame(y).index.max()+ahead+1)]

pd.DataFrame(pred_ts_point, index=forecast_index).plot(label='forecast', ax=ax)

ax.fill_between(pd.DataFrame(pred_ts_interval, index=forecast_index).index,

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,0],

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,1], color='k', alpha=0.15)

plt.legend(['observed', 'forecast'])

display(fit.summary2())

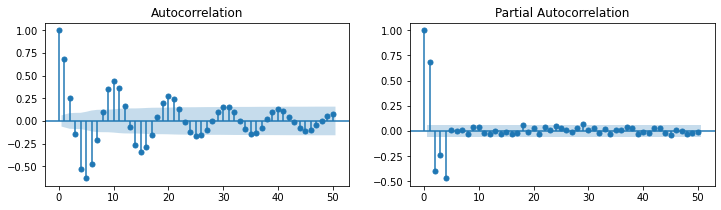

plt.figure(figsize=(12,3))

statsmodels.graphics.tsaplots.plot_acf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(121))

statsmodels.graphics.tsaplots.plot_pacf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(122))

plt.show()

| Model: | ARMA | BIC: | 2775.2511 |

| Dependent Variable: | y | Log-Likelihood: | -1377.3 |

| Date: | 2020-07-31 22:45 | Scale: | 1.0000 |

| No. Observations: | 1000 | Method: | css-mle |

| Df Model: | 2 | Sample: | 0 |

| Df Residuals: | 998 | 0 | |

| Converged: | 1.0000 | S.D. of innovations: | 0.959 |

| No. Iterations: | 3.0000 | HQIC: | 2766.124 |

| AIC: | 2760.5278 |

| Coef. | Std.Err. | t | P>|t| | [0.025 | 0.975] | |

|---|---|---|---|---|---|---|

| const | 0.0157 | 0.0500 | 0.3146 | 0.7531 | -0.0823 | 0.1138 |

| ma.L1.y | 0.6508 | 0.0246 | 26.5050 | 0.0000 | 0.6027 | 0.6989 |

| Real | Imaginary | Modulus | Frequency | |

|---|---|---|---|---|

| MA.1 | -1.5365 | 0.0000 | 1.5365 | 0.5000 |

### ARMA(0,2) = MA(2)

# Setting

np.random.seed(123)

ar_params = np.array([])

ma_params = np.array([0.65, -0.25])

index_name = ['const', 'ma(1)', 'ma(2)']

ahead = 100

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

ar_order, ma_order = len(ar)-1, len(ma)-1

# Generator

y = statsmodels.tsa.arima_process.arma_generate_sample(ar, ma, nsample=1000, burnin=500)

fit = statsmodels.tsa.arima_model.ARMA(y, (ar_order,ma_order)).fit(trend='c', disp=0)

pred_ts_point = fit.forecast(steps=ahead)[0]

pred_ts_interval = fit.forecast(steps=ahead)[2]

ax = pd.DataFrame(y).plot(figsize=(12,5))

forecast_index = [i for i in range(pd.DataFrame(y).index.max()+1, pd.DataFrame(y).index.max()+ahead+1)]

pd.DataFrame(pred_ts_point, index=forecast_index).plot(label='forecast', ax=ax)

ax.fill_between(pd.DataFrame(pred_ts_interval, index=forecast_index).index,

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,0],

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,1], color='k', alpha=0.15)

plt.legend(['observed', 'forecast'])

display(fit.summary2())

plt.figure(figsize=(12,3))

statsmodels.graphics.tsaplots.plot_acf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(121))

statsmodels.graphics.tsaplots.plot_pacf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(122))

plt.show()

| Model: | ARMA | BIC: | 2783.1398 |

| Dependent Variable: | y | Log-Likelihood: | -1377.8 |

| Date: | 2020-07-31 22:45 | Scale: | 1.0000 |

| No. Observations: | 1000 | Method: | css-mle |

| Df Model: | 3 | Sample: | 0 |

| Df Residuals: | 997 | 0 | |

| Converged: | 1.0000 | S.D. of innovations: | 0.959 |

| No. Iterations: | 8.0000 | HQIC: | 2770.970 |

| AIC: | 2763.5088 |

| Coef. | Std.Err. | t | P>|t| | [0.025 | 0.975] | |

|---|---|---|---|---|---|---|

| const | 0.0130 | 0.0425 | 0.3053 | 0.7602 | -0.0703 | 0.0962 |

| ma.L1.y | 0.6501 | 0.0310 | 20.9416 | 0.0000 | 0.5892 | 0.7109 |

| ma.L2.y | -0.2487 | 0.0307 | -8.0906 | 0.0000 | -0.3090 | -0.1885 |

| Real | Imaginary | Modulus | Frequency | |

|---|---|---|---|---|

| MA.1 | -1.0865 | 0.0000 | 1.0865 | 0.5000 |

| MA.2 | 3.7001 | 0.0000 | 3.7001 | 0.0000 |

### ARMA(0,4) = MA(4)

# Setting

np.random.seed(123)

ar_params = np.array([])

ma_params = np.array([0.65, -0.25, 0.5, -0.9])

index_name = ['const', 'ma(1)', 'ma(2)', 'ma(3)', 'ma(4)']

ahead = 100

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

ar_order, ma_order = len(ar)-1, len(ma)-1

# Generator

y = statsmodels.tsa.arima_process.arma_generate_sample(ar, ma, nsample=1000, burnin=500)

fit = statsmodels.tsa.arima_model.ARMA(y, (ar_order,ma_order)).fit(trend='c', disp=0)

pred_ts_point = fit.forecast(steps=ahead)[0]

pred_ts_interval = fit.forecast(steps=ahead)[2]

ax = pd.DataFrame(y).plot(figsize=(12,5))

forecast_index = [i for i in range(pd.DataFrame(y).index.max()+1, pd.DataFrame(y).index.max()+ahead+1)]

pd.DataFrame(pred_ts_point, index=forecast_index).plot(label='forecast', ax=ax)

ax.fill_between(pd.DataFrame(pred_ts_interval, index=forecast_index).index,

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,0],

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,1], color='k', alpha=0.15)

plt.legend(['observed', 'forecast'])

display(fit.summary2())

plt.figure(figsize=(12,3))

statsmodels.graphics.tsaplots.plot_acf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(121))

statsmodels.graphics.tsaplots.plot_pacf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(122))

plt.show()

| Model: | ARMA | BIC: | 3472.2476 |

| Dependent Variable: | y | Log-Likelihood: | -1715.4 |

| Date: | 2020-07-31 22:45 | Scale: | 1.0000 |

| No. Observations: | 1000 | Method: | css-mle |

| Df Model: | 5 | Sample: | 0 |

| Df Residuals: | 995 | 0 | |

| Converged: | 1.0000 | S.D. of innovations: | 1.344 |

| No. Iterations: | 12.0000 | HQIC: | 3453.993 |

| AIC: | 3442.8011 |

| Coef. | Std.Err. | t | P>|t| | [0.025 | 0.975] | |

|---|---|---|---|---|---|---|

| const | 0.0079 | 0.0297 | 0.2653 | 0.7908 | -0.0504 | 0.0662 |

| ma.L1.y | -0.0418 | 0.0275 | -1.5186 | 0.1289 | -0.0957 | 0.0121 |

| ma.L2.y | 0.2823 | 0.0276 | 10.2186 | 0.0000 | 0.2282 | 0.3365 |

| ma.L3.y | -0.0569 | 0.0273 | -2.0805 | 0.0375 | -0.1105 | -0.0033 |

| ma.L4.y | -0.4858 | 0.0279 | -17.4311 | 0.0000 | -0.5404 | -0.4311 |

| Real | Imaginary | Modulus | Frequency | |

|---|---|---|---|---|

| MA.1 | 1.2761 | -0.0000 | 1.2761 | -0.0000 |

| MA.2 | -0.0088 | -1.0828 | 1.0829 | -0.2513 |

| MA.3 | -0.0088 | 1.0828 | 1.0829 | 0.2513 |

| MA.4 | -1.3757 | -0.0000 | 1.3757 | -0.5000 |

### ARMA(1,1)

# Setting

np.random.seed(123)

ar_params = np.array([0.75])

ma_params = np.array([0.65])

index_name = ['const', 'ar(1)', 'ma(1)']

ahead = 100

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

ar_order, ma_order = len(ar)-1, len(ma)-1

# Generator

y = statsmodels.tsa.arima_process.arma_generate_sample(ar, ma, nsample=1000, burnin=500)

fit = statsmodels.tsa.arima_model.ARMA(y, (ar_order,ma_order)).fit(trend='c', disp=0)

pred_ts_point = fit.forecast(steps=ahead)[0]

pred_ts_interval = fit.forecast(steps=ahead)[2]

ax = pd.DataFrame(y).plot(figsize=(12,5))

forecast_index = [i for i in range(pd.DataFrame(y).index.max()+1, pd.DataFrame(y).index.max()+ahead+1)]

pd.DataFrame(pred_ts_point, index=forecast_index).plot(label='forecast', ax=ax)

ax.fill_between(pd.DataFrame(pred_ts_interval, index=forecast_index).index,

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,0],

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,1], color='k', alpha=0.15)

plt.legend(['observed', 'forecast'])

display(fit.summary2())

plt.figure(figsize=(12,3))

statsmodels.graphics.tsaplots.plot_acf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(121))

statsmodels.graphics.tsaplots.plot_pacf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(122))

plt.show()

| Model: | ARMA | BIC: | 2783.7601 |

| Dependent Variable: | y | Log-Likelihood: | -1378.1 |

| Date: | 2020-07-31 22:45 | Scale: | 1.0000 |

| No. Observations: | 1000 | Method: | css-mle |

| Df Model: | 3 | Sample: | 0 |

| Df Residuals: | 997 | 0 | |

| Converged: | 1.0000 | S.D. of innovations: | 0.959 |

| No. Iterations: | 9.0000 | HQIC: | 2771.590 |

| AIC: | 2764.1291 |

| Coef. | Std.Err. | t | P>|t| | [0.025 | 0.975] | |

|---|---|---|---|---|---|---|

| const | 0.0641 | 0.1970 | 0.3252 | 0.7450 | -0.3220 | 0.4501 |

| ar.L1.y | 0.7465 | 0.0224 | 33.3502 | 0.0000 | 0.7027 | 0.7904 |

| ma.L1.y | 0.6519 | 0.0261 | 24.9637 | 0.0000 | 0.6007 | 0.7031 |

| Real | Imaginary | Modulus | Frequency | |

|---|---|---|---|---|

| AR.1 | 1.3395 | 0.0000 | 1.3395 | 0.0000 |

| MA.1 | -1.5340 | 0.0000 | 1.5340 | 0.5000 |

### ARMA(2,2)

# Setting

np.random.seed(123)

ar_params = np.array([0.75, -0.25])

ma_params = np.array([0.65, 0.5])

index_name = ['const', 'ar(1)', 'ar(2)', 'ma(1)', 'ma(2)']

ahead = 100

ar, ma = np.r_[1, -ar_params], np.r_[1, ma_params]

ar_order, ma_order = len(ar)-1, len(ma)-1

# Generator

y = statsmodels.tsa.arima_process.arma_generate_sample(ar, ma, nsample=1000, burnin=500)

fit = statsmodels.tsa.arima_model.ARMA(y, (ar_order,ma_order)).fit(trend='c', disp=0)

pred_ts_point = fit.forecast(steps=ahead)[0]

pred_ts_interval = fit.forecast(steps=ahead)[2]

ax = pd.DataFrame(y).plot(figsize=(12,5))

forecast_index = [i for i in range(pd.DataFrame(y).index.max()+1, pd.DataFrame(y).index.max()+ahead+1)]

pd.DataFrame(pred_ts_point, index=forecast_index).plot(label='forecast', ax=ax)

ax.fill_between(pd.DataFrame(pred_ts_interval, index=forecast_index).index,

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,0],

pd.DataFrame(pred_ts_interval, index=forecast_index).iloc[:,1], color='k', alpha=0.15)

plt.legend(['observed', 'forecast'])

display(fit.summary2())

plt.figure(figsize=(12,3))

statsmodels.graphics.tsaplots.plot_acf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(121))

statsmodels.graphics.tsaplots.plot_pacf(y, lags=50, zero=True, use_vlines=True, alpha=0.05, ax=plt.subplot(122))

plt.show()

| Model: | ARMA | BIC: | 2796.4977 |

| Dependent Variable: | y | Log-Likelihood: | -1377.5 |

| Date: | 2020-07-31 22:45 | Scale: | 1.0000 |

| No. Observations: | 1000 | Method: | css-mle |

| Df Model: | 5 | Sample: | 0 |

| Df Residuals: | 995 | 0 | |

| Converged: | 1.0000 | S.D. of innovations: | 0.958 |

| No. Iterations: | 12.0000 | HQIC: | 2778.243 |

| AIC: | 2767.0512 |

| Coef. | Std.Err. | t | P>|t| | [0.025 | 0.975] | |